Setting up an HA NAS with Supermicro

Description

Dual-Controller NAS vs. Storage Server:

This SSG-221E-DN2R24R unit is essentially a storage server with an integrated dual-controller architecture, with its two nodes acting as the two controllers. Thanks to its SBB design, it offers high reliability and availability for file storage with a relatively simple configuration compared to building an HA cluster from scratch with standard servers.

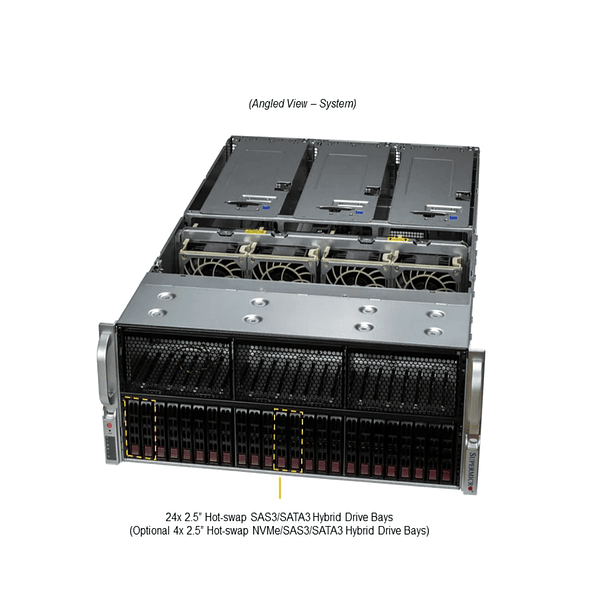

The Supermicro SSG-221E-DN2R24R is ideal for setting up a high-availability (HA) NAS thanks to its Storage Bridge Bay (SBB) design, featuring two independent nodes sharing 24 NVMe PCIe Gen5 bays. This means it's already prepared for hardware redundancy.

Here is a detailed configuration for an HA NAS with this unit, considering the main components and the necessary software:

1. Physical Components (Hardware):

- Supermicro SSG-221E-DN2R24R: This is the foundation of our solution. It contains:

- Two independent server nodes (Dual Node): Each node is a complete server with its own CPU (4th/5th Gen Intel Xeon Scalable), RAM (up to 2TB DDR5 per node), and network ports (2x 10GbE per node).

- 24 dual-port U.2 NVMe Gen 5 bays: Critical for high availability, as each NVMe drive is accessible by both nodes.

- Redundant power supplies (2x 2000W Titanium): These keep the system running even if one power supply fails.

- 1GbE private Ethernet connection for inter-node communication (Heartbeat): Essential for synchronization and fault detection in the HA cluster.

- NVMe drives:

- Type: Dual-port NVMe U.2 PCIe Gen 5. Dual-port drives are essential because they give both nodes a physical path to every drive (in an active-passive setup, only one node actively uses them at a time). The quantity and capacity will depend on your storage requirements.

- RAID Configuration: Although the chassis gives both nodes access to all disks, you still need a software RAID (or ZFS) layer to build redundant storage volumes. Consider layouts like RAID 10 (ZFS mirrors) or RAID 6 (ZFS RAIDZ2) for a good balance between performance and data protection.
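To compare the RAID 10 and RAID 6 options above, a back-of-the-envelope capacity calculation helps. The sketch below is illustrative only: it assumes a hypothetical drive size of 7.68 TB, ignores ZFS metadata, slop space, RAIDZ padding and hot spares, and models a "two 12-drive RAIDZ2 vdevs" layout via the `groups` parameter, which trades some capacity for more vdevs (and therefore more parallelism) than one 24-drive array.

```python
# Rough usable-capacity estimates for common 24-drive layouts.
# Hypothetical inputs: 24 identical drives of 7.68 TB each.
# Ignores ZFS metadata, slop space, RAIDZ padding and hot spares.

PARITY_DRIVES = {"raid6": 2, "raidz1": 1, "raidz2": 2, "raidz3": 3}


def usable_tb(drives: int, drive_tb: float, layout: str, groups: int = 1) -> float:
    """Approximate usable TB for `drives` split evenly into `groups` arrays/vdevs."""
    if groups < 1 or drives % groups != 0:
        raise ValueError("drives must split evenly into groups")
    if layout == "raid10":
        # Two-way mirrors: every block is stored twice, so half the raw space is usable.
        if drives % 2 != 0:
            raise ValueError("raid10 needs an even number of drives")
        return drives // 2 * drive_tb
    if layout not in PARITY_DRIVES:
        raise ValueError(f"unknown layout: {layout}")
    per_group = drives // groups
    parity = PARITY_DRIVES[layout]
    if per_group <= parity:
        raise ValueError("too few drives per group for this parity level")
    # Each group gives up `parity` drives' worth of space to parity.
    return groups * (per_group - parity) * drive_tb


if __name__ == "__main__":
    for layout, groups in [("raid10", 1), ("raid6", 1), ("raidz2", 2)]:
        print(f"{layout} x{groups}: {usable_tb(24, 7.68, layout, groups):.2f} TB usable")
```

With these hypothetical inputs, RAID 10 yields about 92 TB, a single 24-drive RAID 6 about 169 TB, and two RAIDZ2 vdevs about 154 TB.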

- RAM:

- For each node, it's recommended to configure an appropriate amount of DDR5 ECC RAM. The datasheet mentions up to 2TB per node, but the actual amount will depend on the workload and the NAS operating system/software you use. For an HA NAS, it's prudent to have at least 64GB–128GB per node, and more if you plan to use deduplication, compression, or virtualization.

- Network Adapters (NICs):

- Each node already comes with two integrated 10GbE ports (Intel X710). These are excellent for NAS data traffic.

- For increased redundancy and bandwidth, you may want to consider adding additional PCIe 5.0 cards (e.g., 25GbE or 100GbE) if network performance is critical. The unit has 2x PCIe 5.0 x16 HHHL slots and 2x PCIe 5.0 x8 HHHL slots per node for expansion.

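- Be sure to configure bonding (link aggregation with LACP/802.3ad) for the ports on each node that connect to the main network, providing redundancy and more aggregate bandwidth. LACP requires the corresponding switch ports to be configured as a matching link aggregation group.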
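One nuance of the "increased bandwidth" from bonding: an LACP bond pins each network flow to a single member link, so aggregate throughput grows with the number of clients and connections, but a single connection never exceeds one link's speed. The sketch below illustrates the idea with a simplified CRC32 hash over the flow's 4-tuple; this hash is a stand-in chosen for illustration and is not the exact formula used by the Linux bonding driver or by any switch. The IP addresses are hypothetical.

```python
# Why a LACP bond adds aggregate bandwidth but does not speed up a single
# connection: the bond pins each flow to one member link by hashing its headers.
# The CRC32 hash below is an illustrative stand-in, NOT the exact formula used
# by the Linux bonding driver or any switch.
import zlib
from collections import Counter


def pick_link(src_ip: str, dst_ip: str, src_port: int, dst_port: int, n_links: int) -> int:
    """Map a TCP/UDP flow (its 4-tuple) to one of n_links member links."""
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()
    return zlib.crc32(key) % n_links


if __name__ == "__main__":
    links = 2  # e.g. the two onboard 10GbE ports bonded together
    # A single NFS connection (port 2049) always hashes to the same link,
    # so one client stream is capped at one link's speed (10 Gb/s here).
    print("single flow ->", pick_link("10.0.0.5", "10.0.0.50", 50000, 2049, links))
    # Many clients produce many flows, which spread across both links.
    flows = Counter(
        pick_link(f"10.0.0.{i}", "10.0.0.50", 40000 + i, 2049, links)
        for i in range(1, 101)
    )
    print("flows per link:", dict(flows))
```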

- Management Connectivity:

- Each node has a dedicated 1GbE LAN port for the Baseboard Management Controller (BMC) (IPMI). This is crucial for remote management, monitoring, and configuring STONITH (Shoot The Other Node In The Head), an essential fencing technique in HA clusters.

2. Software for an HA NAS:

For a high-availability NAS on this platform, the most common and robust options are:

- Base Operating System (per node):

- Linux (Recommended): Distributions such as Ubuntu Server LTS, Red Hat Enterprise Linux (RHEL), CentOS Stream, or SUSE Linux Enterprise Server. They are flexible, stable, and offer excellent support for high-availability solutions.

- FreeBSD: If you prefer a BSD environment, consider TrueNAS CORE (formerly FreeNAS), which is FreeBSD-based. Its Linux-based sibling, TrueNAS SCALE, is also worth a look for its container and VM support.

- Storage and High Availability Solution:

- ZFS (Zettabyte File System) + Pacemaker/Corosync (Recommended for Linux):

- ZFS: A widely used file system and volume manager for high-performance, reliable storage. It lets you build storage pools directly on the NVMe drives, with data-integrity checksums, snapshots, clones, compression, deduplication, and redundancy layouts such as mirrors, RAIDZ, RAIDZ2, and RAIDZ3.

- Pacemaker and Corosync: These are the key components for managing high-availability clusters on Linux.

- Corosync: Provides the heartbeat and communication between nodes, detecting failures and maintaining quorum.

- Pacemaker: The cluster's resource manager. It monitors the status of services (e.g., NFS, Samba, iSCSI) and the ZFS pool, and orchestrates automatic failover if a node fails.

- DRBD (Distributed Replicated Block Device) + Pacemaker/Corosync (Alternative):

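- DRBD replicates block-level data between the two nodes in real time, creating a mirrored logical device; if one node fails, the other takes over the device. It is a different approach from shared-storage ZFS and also very robust, but it is designed for nodes that each have their own disks, so it is a less natural fit for this chassis, where both nodes already see the same dual-port drives.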

- TrueNAS SCALE (Linux-based, with ZFS):

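- TrueNAS SCALE is a Debian Linux-based NAS distribution that incorporates ZFS and provides a unified management interface for storage, services, and (where supported) HA, which greatly simplifies ZFS and cluster management. Note that TrueNAS's built-in HA failover is tied to iXsystems' TrueNAS Enterprise hardware, so confirm whether it can be used on a third-party chassis like this one before planning around it.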

- Third-party software-defined storage (SDS) solutions: Options include Xinnor xiRAID, or Storage Spaces Direct (Microsoft) for Windows Server environments (although the SSG-221E-DN2R24R is more oriented toward Linux). Supermicro has published a white paper specifically on the SSG-221E-DN2R24R with xiRAID.
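Corosync's quorum rule has a direct consequence for a two-node system like this one: a strict majority of two votes is two, so the surviving node would lose quorum the moment its partner fails. Corosync's votequorum handles this with the `two_node: 1` option (which also enables `wait_for_all` at startup), and that option is only safe when fencing works. The sketch below assumes one vote per node and renders only the `quorum` fragment of corosync.conf; if you build the cluster with higher-level tooling, check the generated file, since the tooling may already set this for you.

```python
# Majority quorum as used by Corosync's votequorum, and the corosync.conf
# quorum section this implies. One vote per node is assumed; the rendered
# text is a fragment, not a complete corosync.conf.


def votes_needed(total_votes: int) -> int:
    """A partition is quorate only if it holds a strict majority of votes."""
    return total_votes // 2 + 1


def quorum_section(node_count: int) -> str:
    lines = ["quorum {", "    provider: corosync_votequorum"]
    if node_count == 2:
        # With two votes, a strict majority (2) is lost as soon as one node
        # disappears. two_node: 1 lets the survivor stay quorate; it relies on
        # working fencing (STONITH) to remain safe.
        lines.append("    two_node: 1")
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    for n in (2, 3):
        need = votes_needed(n)
        print(f"{n} nodes: {need} votes needed, survives losing {n - need} node(s)")
    print(quorum_section(2))
```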

3. Key Steps for Configuration:

- OS Installation: Install the same operating system (e.g., Ubuntu Server LTS) on both nodes. Make sure to install it on a separate boot drive (e.g., the internal M.2 NVMe drives if you're using them, or a small NVMe drive for the OS) to avoid occupying the main data bays.

- Network Configuration:

- Configure 10GbE network interfaces for data traffic and create a bond (LACP) for redundancy and performance.

- Configure the dedicated 1GbE interface as a private "heartbeat" network between the two nodes.

- Configure the IPMI (BMC) interfaces for remote management and STONITH. Make sure each node can reach the other node's BMC over the network, since fencing depends on it.
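On Ubuntu Server, the data bond and the heartbeat interface can be described with netplan. The helper below renders such a file for one node. The interface names (`eno1`, `eno2`, `eno3`), the addresses, and the choice of `layer3+4` hashing are hypothetical placeholders; routes and DNS are omitted. The NAS's floating IP is deliberately absent, because Pacemaker, not netplan, should own it.

```python
# Render a netplan file for one node: an LACP (802.3ad) bond of two 10GbE
# ports for NAS data traffic plus a standalone heartbeat interface.
# Interface names and addresses are hypothetical placeholders.


def render_netplan(data_ifaces: list[str], bond_addr: str,
                   heartbeat_iface: str, heartbeat_addr: str) -> str:
    lines = ["network:", "  version: 2", "  ethernets:"]
    for iface in data_ifaces:
        lines.append(f"    {iface}: {{}}")  # bond members carry no address
    lines += [
        f"    {heartbeat_iface}:",
        f"      addresses: [{heartbeat_addr}]",  # private heartbeat link, no gateway
        "  bonds:",
        "    bond0:",
        f"      interfaces: [{', '.join(data_ifaces)}]",
        f"      addresses: [{bond_addr}]",
        "      parameters:",
        "        mode: 802.3ad",  # LACP; switch ports must be in a matching LAG
        "        lacp-rate: fast",
        "        mii-monitor-interval: 100",
        "        transmit-hash-policy: layer3+4",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_netplan(["eno1", "eno2"], "192.168.10.11/24", "eno3", "172.16.0.1/30"), end="")
```

Each node gets its own addresses. Write the output to a file under `/etc/netplan/`, review it, and use `netplan try` before `netplan apply` so a mistake does not cut off remote access.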

- Storage Configuration (ZFS):

- On each node, verify that all 24 dual-port NVMe drives are detected.

- Install ZFS on both nodes.

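- Create the shared ZFS pool on one node only. Dual-port NVMe drives let both nodes see the same drives, but in an active-passive setup only one node may have the pool imported (and its shares exported) at any given time; the other node imports it during failover. Setting the pool property multihost=on (which requires a unique host ID on each node) adds a safeguard against both nodes importing the pool at once.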

- Create the necessary datasets and zvols within the ZFS pool.
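The pool-creation step can be sketched as a small command builder that prints, rather than runs, the commands for review. Assumptions, all hypothetical: a pool named `tank`, two 12-drive RAIDZ2 vdevs, `ashift=12` (4 KiB sectors), and placeholder device paths. Referencing drives by `/dev/disk/by-id/` names is preferable to `/dev/nvmeXnY`, since by-id names follow the drive rather than enumeration order on each node. `multihost=on` is included as a guard against double import; it needs a unique host ID per node (OpenZFS ships `zgenhostid` for this).

```python
# Build (but do not run) the zpool command that creates the shared pool on ONE
# node. Assumptions: two 12-drive RAIDZ2 vdevs, ashift=12 (4 KiB sectors), and
# multihost=on as a guard against importing the pool on both nodes at once
# (requires a unique host ID per node, e.g. generated with zgenhostid).
# Device paths are hypothetical; use the real /dev/disk/by-id/ names.
import shlex


def zpool_create_cmd(pool: str, disks: list[str], vdev_width: int,
                     vdev_type: str = "raidz2") -> list[str]:
    if vdev_width < 1 or len(disks) % vdev_width != 0:
        raise ValueError("disks must split evenly into vdevs")
    cmd = ["zpool", "create", "-o", "ashift=12", "-o", "multihost=on", pool]
    for start in range(0, len(disks), vdev_width):
        cmd.append(vdev_type)  # each vdev keyword starts a new vdev
        cmd.extend(disks[start:start + vdev_width])
    return cmd


if __name__ == "__main__":
    disks = [f"/dev/disk/by-id/nvme-EXAMPLE-{n:02d}" for n in range(24)]
    print(shlex.join(zpool_create_cmd("tank", disks, vdev_width=12)))
    # Create datasets for the shares, then export the pool so that the
    # cluster resource manager, not this node, controls where it is imported.
    print(shlex.join(["zfs", "create", "tank/nfs"]))
    print(shlex.join(["zpool", "export", "tank"]))
```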

- HA Cluster Configuration (Pacemaker/Corosync):

- Install Corosync and Pacemaker on both nodes.

- Configure Corosync for cluster communication, using the dedicated heartbeat network.

- Configure Pacemaker to manage NAS resources:

- Define the ZFS pool as a resource that can be imported on only one node at a time.

- Configure the network services (NFS, Samba/CIFS, iSCSI) as resources that depend on the ZFS pool.

- Define a floating IP address (virtual IP) for the NAS, which moves between nodes on failover.

- Configure STONITH (fencing): This is critical. Use each node's Supermicro BMC through the fence_ipmilan fence agent so that, if one node fails or stops responding, the surviving node can power it off before taking over the pool. This prevents a "split-brain" situation in which both nodes try to write to the same disks simultaneously.
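The Pacemaker steps above can be sketched as a list of `pcs` commands, again printed for review rather than executed. This assumes a pcs-managed cluster with the resource-agents package (providing `ocf:heartbeat:ZFS` and `ocf:heartbeat:IPaddr2`) and fence-agents (providing `fence_ipmilan`) installed; node names, BMC addresses, the user name, pool name and VIP are hypothetical, and `CHANGE_ME` stands in for a real credential you should not leave in shell history. The NFS/Samba/iSCSI export resources are omitted; they would join the same group between the pool and the virtual IP. Confirm parameter names on your system with `pcs stonith describe fence_ipmilan` and `pcs resource describe ocf:heartbeat:ZFS`.

```python
# Build (but do not run) pcs commands for an active-passive NAS service.
# Assumptions: pcs-managed Pacemaker with resource-agents and fence-agents
# installed. Node names, BMC IPs, user, pool and VIP are placeholders.
import shlex


def pcs_commands(pool: str, vip: str, cidr: int,
                 bmcs: dict[str, str], bmc_user: str) -> list[list[str]]:
    cmds = []
    for node, bmc_ip in bmcs.items():
        fence_id = f"fence-{node}"
        # One fence device per node, pointing at that node's BMC.
        cmds.append(["pcs", "stonith", "create", fence_id, "fence_ipmilan",
                     f"ip={bmc_ip}", f"username={bmc_user}", "password=CHANGE_ME",
                     "lanplus=1", f"pcmk_host_list={node}"])
        # Keep each fence device off the node it is meant to fence.
        cmds.append(["pcs", "constraint", "location", fence_id, "avoids", node])
    # Members of a group start in order and always run on the same node:
    # import the pool first, then bring up the floating IP.
    cmds.append(["pcs", "resource", "create", "nas-pool", "ocf:heartbeat:ZFS",
                 f"pool={pool}", "--group", "nas"])
    cmds.append(["pcs", "resource", "create", "nas-vip", "ocf:heartbeat:IPaddr2",
                 f"ip={vip}", f"cidr_netmask={cidr}", "--group", "nas"])
    return cmds


if __name__ == "__main__":
    bmcs = {"node1": "10.0.99.11", "node2": "10.0.99.12"}
    for cmd in pcs_commands("tank", "192.168.10.50", 24, bmcs, "ADMIN"):
        print(shlex.join(cmd))
```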

- Failover and Failback Testing:

- Once configured, perform extensive failover testing (shutting down a node, disconnecting the network, etc.) to ensure the other node successfully takes over and that services are restored without significant disruption.

- Test failback to ensure the original node can rejoin the cluster and resume services if necessary.
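To put a number on "without significant disruption", a client can probe the floating IP throughout a failover test and report the longest outage. This sketch assumes NFS over TCP port 2049 and a hypothetical VIP of 192.168.10.50; a successful TCP connect only shows that something is listening on the VIP, not that the export is healthy, and the measurement is only as precise as the probe interval.

```python
# Measure client-visible downtime during a failover test by probing the
# floating IP. Assumptions: clients use NFS over TCP port 2049, and a
# successful TCP connect counts as "service up" (it does not prove the NFS
# export itself is healthy). Resolution is limited to the probe interval.
import socket
import time


def probe(host: str, port: int = 2049, timeout: float = 0.5) -> bool:
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def longest_outage(samples: list[tuple[float, bool]]) -> float:
    """Longest time from a failed probe to the next successful one.
    An outage still open at the end is measured up to the last sample."""
    worst = 0.0
    down_since = None
    for ts, ok in samples:
        if not ok and down_since is None:
            down_since = ts
        elif ok and down_since is not None:
            worst = max(worst, ts - down_since)
            down_since = None
    if down_since is not None:
        worst = max(worst, samples[-1][0] - down_since)
    return worst


def watch(host: str, duration_s: float = 120.0,
          interval_s: float = 0.5) -> list[tuple[float, bool]]:
    samples = []
    deadline = time.monotonic() + duration_s
    while time.monotonic() < deadline:
        samples.append((time.monotonic(), probe(host)))
        time.sleep(interval_s)
    return samples


if __name__ == "__main__":
    # Start this on a client, then power off or fence the active node.
    samples = watch("192.168.10.50", duration_s=120.0)
    print(f"longest outage: {longest_outage(samples):.1f} s")
```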

Additional Considerations:

- NVMe Performance: PCIe Gen5 NVMe drives deliver exceptional performance. Make sure your network and client applications can take advantage of this bandwidth.

- Monitoring: Implement a robust monitoring system (e.g., Prometheus + Grafana, Zabbix) to monitor the health of NAS nodes, storage, network, and services.

- Backups: Even with HA, redundancy is no substitute for backups. Implement a regular backup strategy to protect your data from logical corruption, human error, or major disasters.

- Super Latam: For buyers in Chile, Super Latam is an authorized Supermicro distributor that can provide components, support, and specific technical advice for this configuration.
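The NVMe performance point can be made concrete by comparing theoretical link rates. The sketch below counts only line encoding (PCIe 5.0 runs 32 GT/s per lane with 128b/130b encoding) and ignores other protocol overhead; it also assumes that, in dual-port mode, each node reaches a drive over an x2 link. Real drives deliver less than these ceilings, but the comparison still shows where the bottleneck is likely to sit.

```python
# Theoretical line rates, ignoring protocol overhead beyond line encoding.
# PCIe 5.0 runs 32 GT/s per lane with 128b/130b encoding.
# Assumption: in dual-port mode each node reaches a drive over an x2 link.


def pcie5_gb_per_s(lanes: int) -> float:
    """Approximate PCIe 5.0 bandwidth per direction, in GB/s."""
    return 32 * lanes * (128 / 130) / 8


def ethernet_gb_per_s(gbit: float) -> float:
    """Ethernet line rate converted from Gb/s to GB/s."""
    return gbit / 8


if __name__ == "__main__":
    rows = [
        ("PCIe 5.0 x4 (full drive)", pcie5_gb_per_s(4)),
        ("PCIe 5.0 x2 (one port of a dual-port drive)", pcie5_gb_per_s(2)),
        ("10GbE", ethernet_gb_per_s(10)),
        ("2x 10GbE bond (aggregate)", ethernet_gb_per_s(20)),
        ("100GbE", ethernet_gb_per_s(100)),
    ]
    for name, rate in rows:
        print(f"{name:45} {rate:6.2f} GB/s")
```

Under these assumptions, a single drive port (about 7.9 GB/s) already outruns the bonded onboard 10GbE ports (2.5 GB/s aggregate) roughly threefold, so with 24 drives the network, not the storage, is likely to be the limit unless faster NICs are added.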

