Supermicro Servers for Machine Learning: A Detailed Analysis of Solutions and Capabilities

Executive Summary – Supermicro Servers for Machine Learning

Supermicro at a Glance

Supermicro has become a comprehensive IT solutions provider, with strong positions in Machine Learning (ML), AI, HPC, cloud, storage, and 5G/Edge infrastructure. Its guiding principle, “Performance, Efficiency, and Fast Time-to-Market”, is aimed at helping organizations adopt advanced AI/ML technologies rapidly.

Strategic Strengths

- Modular Architecture: Building Block Solutions® + Resource-Saving Architecture = extreme customization, scalability, and long-term efficiency.

- Energy & Sustainability: Direct Liquid Cooling (DLC) reduces energy use by up to 40%, increases compute density per rack, and lowers both total cost of ownership (TCO) and total cost to the environment (TCE).

- Proven Performance: In MLPerf v5.0, Supermicro systems generated 3× more tokens per second than the prior generation.

- GPU Leadership: Support for the broadest set of accelerators:

  - NVIDIA: A100, H100, B200, L40S, L4, RTX PRO 6000 Blackwell.

  - AMD Instinct: MI300X and the MI350 series (MI350: 288 GB HBM3e per GPU, up to 40% more tokens per dollar).

  - Intel: Data Center GPU Flex series.

- Future-Ready Innovation: Early adoption of next-gen GPUs (e.g., NVIDIA B200, AMD MI350, Intel Flex) keeps clients at the cutting edge.
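The "up to 40%" DLC figure in the strengths above can be turned into a rough upper-bound estimate for a given site. The sketch below is only illustrative arithmetic: the function name, the baseline consumption, and the electricity price are hypothetical inputs, not Supermicro figures, and real savings depend on the facility and workload.

```python
def dlc_savings_upper_bound(baseline_kwh_per_year, price_per_kwh, reduction=0.40):
    """Return (kWh saved, cost saved) if the full 'up to' reduction applied.

    `reduction` defaults to the 40% ceiling quoted for DLC; treat the
    result as a best case, not a forecast.
    """
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be a fraction between 0 and 1")
    saved_kwh = baseline_kwh_per_year * reduction
    return saved_kwh, saved_kwh * price_per_kwh


# Hypothetical site: 1,000,000 kWh per year at 0.10 currency units per kWh.
saved_kwh, saved_cost = dlc_savings_upper_bound(1_000_000, 0.10)
print(f"Best case: {saved_kwh:,.0f} kWh and {saved_cost:,.0f} currency units per year")
```

Passing a smaller `reduction` (for example 0.20) gives a more conservative planning number.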

Product Portfolio for ML

-

GPU Servers:

- 8U/10U systems for large-scale AI training & HPC (up to 8 GPUs per node).

- 5U systems with up to 10 double-width GPUs.

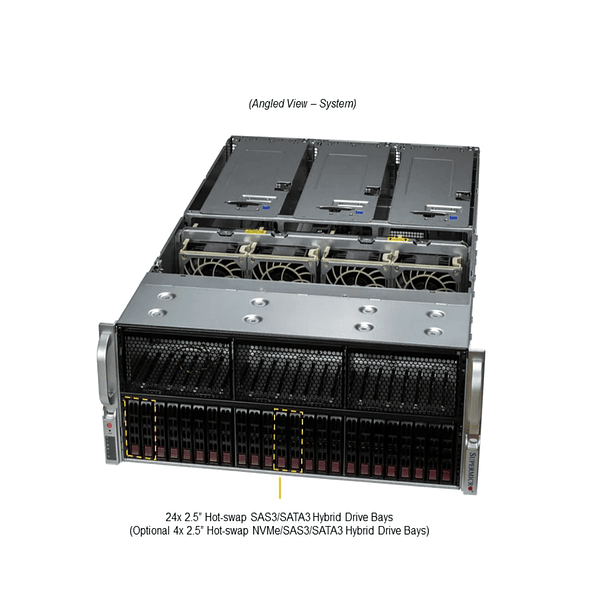

- 4U/2U/1U systems for balanced compute or edge inference.

- Twin/Multi-node: BigTwin®, GrandTwin®, FlexTwin™, and FatTwin® maximize density, efficiency, and shared resources.

- Blade Servers: SuperBlade® (high performance), MicroBlade® & MicroCloud (highest density & efficiency).

- Storage Systems: All-Flash NVMe, Petascale Grace (up to 983 TB in 1U), Top-Loading, JBOF/JBOD enclosures.

- Edge & Telecom: Rugged, compact GPU servers for low-latency inference, 5G, IoT, and smart cities.

- SuperWorkstations: Developer platforms with air or liquid cooling, supporting NVIDIA H100/RTX Pro 6000.

Key Technology Differentiators

- CPUs: AMD EPYC (up to 192 cores) and Intel Xeon (5th Gen with Intel AMX, with a quoted gain of up to 339% on ML benchmarks).

- Memory & Storage: 6 to 12 TB of DDR5 depending on the platform, plus up to 2.3 TB of GPU HBM3e per system. Ultra-fast NVMe storage over PCIe 5.0.

- Networking: End-to-end 400GbE/InfiniBand, GPU-to-NIC 1:1 design to eliminate bottlenecks.

- Cooling: DLC systems with 80 kW D2C cooling per rack, redundant pumps/PSUs, leak-proof connectors.
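The per-system numbers above follow from simple multiplication. As a sketch, assuming a node with 8 GPUs of 288 GB HBM3e each and one 400 Gb/s NIC per GPU (the 1:1 design), the aggregate memory and network figures work out as follows. The function and constant names are illustrative only.

```python
GPUS_PER_NODE = 8        # "up to 8 GPUs per node" for the 8U/10U systems
HBM_PER_GPU_GB = 288     # HBM3e per MI350-series GPU
NIC_SPEED_GBIT = 400     # one 400GbE/InfiniBand port per GPU (1:1 design)


def total_hbm_gb(gpus, hbm_per_gpu_gb):
    """Aggregate GPU memory in GB across one node."""
    return gpus * hbm_per_gpu_gb


def aggregate_nic_gbit(gpus, nic_gbit, nics_per_gpu=1):
    """Aggregate network bandwidth in Gb/s when each GPU has its own NIC(s)."""
    return gpus * nics_per_gpu * nic_gbit


hbm_gb = total_hbm_gb(GPUS_PER_NODE, HBM_PER_GPU_GB)
net_gbit = aggregate_nic_gbit(GPUS_PER_NODE, NIC_SPEED_GBIT)

print(f"HBM3e per node: {hbm_gb} GB (about {hbm_gb / 1000:.1f} TB)")
print(f"Network per node: {net_gbit} Gb/s ({net_gbit // 8} GB/s)")
```

With these assumptions the node totals 2304 GB of HBM3e, which matches the 2.3 TB figure, and 3200 Gb/s of network bandwidth.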

Software & Ecosystem

- Frameworks: TensorFlow, PyTorch, scikit-learn, and Caffe are fully supported.

- NVIDIA Stack: CUDA, cuDNN, TensorRT, NVIDIA AI Enterprise certified.

- AMD ROCm 7: Open-source GPU acceleration, optimized PyTorch support, FP4/FP6 precision.

- MLOps & Orchestration:

  - Kubernetes + OpenShift for containerized ML.

  - Partnerships with Hopsworks & Pure Storage (GenAI Pod) for end-to-end AI pipelines.

  - Supermicro’s own tools: SuperCloud Composer, Orchestrator, Server Manager, SuperDoctor® 5.

- OS Compatibility: Linux (Ubuntu, Debian, Arch, openSUSE), Windows, and macOS virtualization.
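On a freshly provisioned server, a quick way to see which parts of the stack listed above are present is to probe for the frameworks from Python. The following is a minimal sketch, not a Supermicro tool: the helper names are hypothetical, and it assumes the frameworks are installed under their usual module names (`tensorflow`, `torch`, `sklearn`, `caffe`).

```python
import importlib.util

# Display name -> importable module name (the usual names, assumed here).
FRAMEWORK_MODULES = {
    "TensorFlow": "tensorflow",
    "PyTorch": "torch",
    "scikit-learn": "sklearn",
    "Caffe": "caffe",
}


def installed_frameworks(modules=FRAMEWORK_MODULES):
    """Map each framework name to True if its module can be located."""
    return {
        name: importlib.util.find_spec(module) is not None
        for name, module in modules.items()
    }


def describe_torch_backend():
    """Report whether PyTorch sees a GPU, and whether the build is CUDA or ROCm."""
    if importlib.util.find_spec("torch") is None:
        return "PyTorch is not installed"
    import torch

    if not torch.cuda.is_available():
        return "PyTorch is installed but no GPU is visible"
    # ROCm builds of PyTorch expose GPUs through the torch.cuda API and
    # set torch.version.hip; CUDA builds leave it as None.
    backend = "ROCm" if getattr(torch.version, "hip", None) else "CUDA"
    return f"PyTorch sees {torch.cuda.device_count()} GPU(s) via {backend}"


for name, present in installed_frameworks().items():
    print(f"{name}: {'found' if present else 'missing'}")
print(describe_torch_backend())
```

The same check applies to NVIDIA and AMD Instinct systems, since ROCm builds of PyTorch reuse the `torch.cuda` namespace.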

ML Use Cases & Applications

- Training & Inference: LLMs, Generative AI, Conversational AI.

- HPC: Climate modeling, scientific research, drug discovery.

- Enterprise Analytics: Fraud detection, anomaly detection, ERP/databases.

- Edge AI & IoT: Retail, manufacturing, healthcare, smart cities.

- Graphics/Media: Cloud gaming, 3D rendering, VDI, streaming.

Availability & Local Support (Chile)

- Authorized Distributors:

  - Super Latam (superlatam.cl)

- Evaluation Programs:

  - JumpStart: remote access to AMD EPYC servers.

  - Proof of Concept (POC): validation on AMD Instinct GPU servers.

Bottom Line

Supermicro delivers end-to-end ML infrastructure that is:

- High-performance (up to 15× inference and 3× training gains over the prior generation).

- Sustainable (40% energy savings via DLC).

- Flexible (multi-vendor GPU/CPU support, modular building block design).

- Proven (certified with NVIDIA, AMD, Intel ecosystems, plus global case studies).

For enterprises, research institutions, and startups in Chile and worldwide, Supermicro offers highly competitive AI/ML servers in terms of cost-performance, scalable from edge inference to full AI factories and SuperClusters.
