Supermicro Servers Recommended for Artificial Intelligence: A Comprehensive Analysis of AI Infrastructure

Executive Summary – Supermicro AI Infrastructure Leadership

Supermicro at a Glance

Supermicro is a leading provider of AI-optimized server solutions, with a broad portfolio that covers training, inference, and HPC workloads across the AI lifecycle. Its “Building Block Solutions®” approach is built around modular components, which allows extensive customization, rapid deployment, and a lower Total Cost of Ownership (TCO).

Strategic Strengths

- GPU Leadership: Full support for NVIDIA (H100, H200, Blackwell) and AMD Instinct (MI300X, MI300A, MI350) accelerators, with high-bandwidth memory (HBM3/3e up to 288 GB/GPU).

- Future-Proof Innovation: Early adoption of next-gen GPUs and co-design partnerships with NVIDIA & AMD.

- Advanced Cooling: Direct Liquid Cooling (DLC) is claimed to reduce power consumption by up to 40%, while enabling higher rack density and lowering TCO.

- Scalability: SuperCluster architecture delivers pre-validated, rack-scale AI systems, scaling from a single 256-GPU unit to clusters of thousands of nodes connected over 400GbE or InfiniBand networking.

- Flexibility: Modular design offers a choice of Intel Xeon or AMD EPYC CPUs, DDR5 memory up to 9TB, NVMe flash, and customizable NICs that allow a 1:1 GPU-to-NIC ratio.

- Total IT Solution Provider: Beyond hardware, Supermicro delivers design, manufacturing, validation, deployment, and management software in a single vendor model.
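
As a rough illustration of what the quoted HBM capacity means in practice, the sketch below estimates whether a model's weights fit in the combined GPU memory of one server. It assumes the 288 GB per-GPU upper bound quoted above and the 8-GPU servers used in the SuperCluster figures in this summary. The function names, the decimal-GB convention, and the example model sizes are hypothetical, and the estimate covers weights only (no activations, KV cache, or optimizer state).

```python
# Weights-only capacity estimate. All names here are illustrative assumptions.
HBM_PER_GPU_GB = 288   # upper bound quoted in this summary (HBM3e)
GPUS_PER_SERVER = 8    # matches the 8-GPU servers in the SuperCluster figures


def weights_size_gb(num_params: float, bytes_per_param: float) -> float:
    """Size of the model weights in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def fits_in_server(num_params: float, bytes_per_param: float,
                   hbm_per_gpu_gb: float = HBM_PER_GPU_GB,
                   gpus: int = GPUS_PER_SERVER) -> bool:
    """True if the weights alone fit in the server's aggregate HBM."""
    return weights_size_gb(num_params, bytes_per_param) <= hbm_per_gpu_gb * gpus


if __name__ == "__main__":
    # Hypothetical 70-billion-parameter model stored at 2 bytes per parameter.
    print(weights_size_gb(70e9, 2))   # 140.0
    print(fits_in_server(70e9, 2))    # True
    # Hypothetical 2-trillion-parameter model at 2 bytes per parameter.
    print(fits_in_server(2e12, 2))    # False: 4000 GB exceeds 8 x 288 GB
```

Real deployments need headroom well beyond the weights, so a result of `True` here is a necessary condition rather than a sizing guarantee.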

Portfolio Highlights

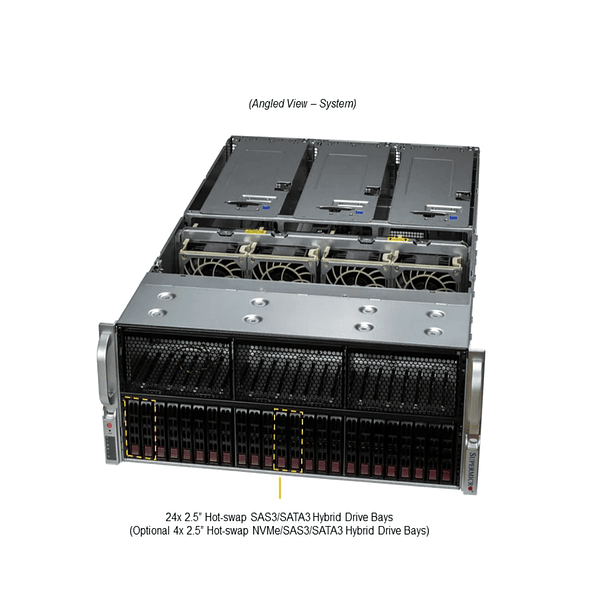

- GPU Servers (8U/10U, 4U, 2U, 1U) → for LLM training, generative AI, and HPC.

- Twin / Multi-Node Systems (BigTwin®, GrandTwin®, FlexTwin™) → high density, distributed AI.

- Edge & Telecom AI Systems → compact, ruggedized for real-time inference & 5G.

- SuperWorkstations → GPU-accelerated developer platforms.

- High-Performance Storage → All-Flash NVMe and Petascale storage servers based on NVIDIA Grace for massive AI datasets.

Software Ecosystem

- NVIDIA CUDA stack (CUDA, cuDNN, TensorRT, AI Enterprise) with certified Supermicro GPU systems.

- AMD ROCm 7 with PyTorch integration, mixed-precision support, and FP4/FP6 data types for more efficient next-gen workloads.

- TensorFlow & PyTorch fully supported across platforms.

- Supermicro Software Tools (SuperCloud Composer, Server Manager, SUM, SuperDoctor® 5) for monitoring, orchestration, and automation.
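
Because PyTorch runs on both the NVIDIA CUDA and AMD ROCm stacks listed above, the same script can check which backend a given server exposes. The sketch below is a minimal example; the function name `detect_accelerator_backend` is hypothetical. It relies on `torch.cuda.is_available()` and `torch.version.hip`, since ROCm builds of PyTorch reuse the `torch.cuda` API and set `torch.version.hip` to a version string.

```python
def detect_accelerator_backend() -> str:
    """Report which GPU backend the installed PyTorch build can use."""
    try:
        import torch  # imported lazily so the script still runs without PyTorch
    except ImportError:
        return "torch-not-installed"

    # ROCm builds of PyTorch expose AMD GPUs through the torch.cuda API.
    if not torch.cuda.is_available():
        return "cpu"

    # torch.version.hip is a version string on ROCm builds and None otherwise.
    return "rocm" if getattr(torch.version, "hip", None) else "cuda"


if __name__ == "__main__":
    print(detect_accelerator_backend())
```

A check like this can be run as a quick smoke test after provisioning, before launching a training or inference job.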

Scalability & Efficiency

- SuperCluster Units: 32 servers × 8 GPUs = 256 GPUs per scalable unit, expandable to enterprise AI factories.

- Global Production: Up to 5,000 racks/month (2,000 liquid-cooled) delivered worldwide.

- Operational Savings: Up to 71% lower power consumption, 67% lower 3-year TCO, and 87% fewer servers with optimized AMD + Supermicro deployments.
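
The scalable-unit arithmetic above (32 servers × 8 GPUs = 256 GPUs) can be turned into a simple sizing helper. The sketch below assumes capacity is added in whole scalable units, which is an assumption made for illustration; the function name `size_cluster` is hypothetical.

```python
import math

SERVERS_PER_UNIT = 32   # from the SuperCluster figures in this summary
GPUS_PER_SERVER = 8     # from the SuperCluster figures in this summary


def size_cluster(target_gpus: int) -> dict:
    """Round a GPU target up to whole scalable units (assumed granularity)."""
    if target_gpus <= 0:
        raise ValueError("target_gpus must be positive")
    gpus_per_unit = SERVERS_PER_UNIT * GPUS_PER_SERVER  # 256
    units = math.ceil(target_gpus / gpus_per_unit)
    return {
        "scalable_units": units,
        "servers": units * SERVERS_PER_UNIT,
        "gpus": units * gpus_per_unit,
    }


if __name__ == "__main__":
    # A 1,000-GPU target rounds up to 4 units: 128 servers, 1,024 GPUs.
    print(size_cluster(1000))
```

Rounding up to whole units leaves some spare capacity (24 GPUs in the example), which is worth factoring into power and networking budgets.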

Real-World Adoption

- Enterprise AI & Startups: Chosen for its cost-performance advantage over Dell, HPE, and Lenovo.

- LLM & Generative AI Leaders: Supermicro systems power AI factories and SuperCluster deployments.

- Research & Edge: Supermicro enables HPC, edge AI, and telecom AI rollouts globally.

Recommendations for CIOs & AI Leaders

- For large-scale training/LLMs → adopt 8U/10U GPU servers or SuperCluster for maximum GPU density.

- For inference & graphics workloads → 4U/5U GPU systems (up to 10 GPUs).

- For edge AI → compact GPU servers or fanless edge platforms for low-latency inference.

- For R&D → liquid-cooled SuperWorkstations with NVIDIA H100/RTX Pro 6000.

- For long-term TCO optimization → adopt DLC-enabled systems for sustainability and efficiency.

Bottom Line

Supermicro positions itself not just as a server vendor but as a Total AI Infrastructure Partner. With a full portfolio from edge to supercluster, support for NVIDIA & AMD ecosystems, and innovations in cooling, scalability, and cost efficiency, Supermicro delivers a competitive, future-ready platform for enterprises, hyperscalers, and startups driving the next wave of AI.
