Installation and operation of a Supermicro AS-4125GS-TNRT2 server equipped with 4 NVIDIA H100 NVL 94GB GPUs

Good Practices for Installation

1. Prior Planning

- Power Requirements:

- NVIDIA H100 NVL 94GB GPUs are high-power components. The AS-4125GS-TNRT2 features four redundant 2000W (2+2) Titanium-level power supplies. Ensure your data center's electrical infrastructure can handle the full load.

- Consider the maximum power the server can consume under full load (CPU, RAM, disks, and especially the 4 H100 NVL GPUs).

- Cooling Requirements:

- This server is designed to handle high thermal loads thanks to its eight heavy-duty hot-swap fans. However, cooling the rack and data center is crucial. Ensure adequate airflow and a controlled ambient temperature (between 10°C and 35°C, ideally in the lower range for optimal performance).

- Consider the rack layout to optimize airflow (cold air intake at the front, hot air exhaust at the rear).

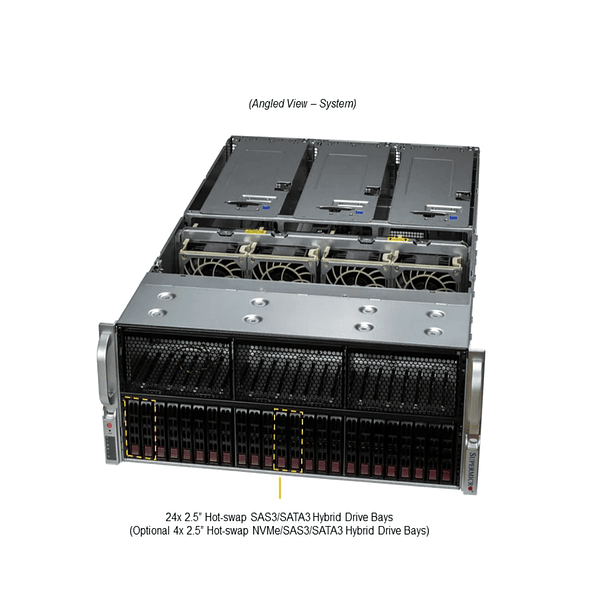

- Rack Space: The AS-4125GS-TNRT2 is a 4U server. Make sure you have enough rack space available and consider future expansion plans.

- Consider leaving 1U of free space above and below the server.

- Never install more than 4 servers of this type in a rack.

2. Initial System Configuration

- BIOS/UEFI:

- Update your BIOS/UEFI firmware to the latest version available on the Supermicro website. This often includes compatibility and performance improvements.

- Check your BIOS settings related to PCIe and GPU. Enable PCIe Gen5 mode if necessary and ensure that "Above 4G Decoding" and "Resizable BAR" are enabled to optimize GPU performance.

- Adjust power settings for optimal performance (e.g., "High Performance" mode instead of "Power Saving").

- Operating System:

- Install an operating system that supports NVIDIA H100 NVL GPUs (usually Linux distributions like Ubuntu Server, CentOS, or RHEL).

- Make sure your Linux kernel version is compatible with the latest NVIDIA drivers.

- NVIDIA Drivers:

- Download and install the latest drivers for the NVIDIA H100 NVL directly from the NVIDIA website. The distribution's drivers may not be up to date.

- Install the CUDA Toolkit and cuDNN, which are essential for developing and running AI and HPC applications. Make sure these versions are compatible with your drivers and frameworks.
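After installing the drivers, it can help to confirm that all four GPUs are visible and report the same driver version. The following is a minimal sketch, not an official procedure: it assumes `nvidia-smi` is on the PATH, and the function names (`read_inventory`, `parse_inventory`, `check_inventory`) are hypothetical helpers introduced only for illustration.

```python
import csv
import io
import subprocess

EXPECTED_GPUS = 4  # this configuration has four H100 NVL cards

QUERY = [
    "nvidia-smi",
    "--query-gpu=index,name,driver_version,memory.total",
    "--format=csv,noheader",
]


def read_inventory():
    """Run nvidia-smi once and return its raw CSV output."""
    return subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout


def parse_inventory(csv_text):
    """Turn nvidia-smi CSV output into a list of dicts, one per GPU."""
    rows = csv.reader(io.StringIO(csv_text), skipinitialspace=True)
    return [
        {"index": int(r[0]), "name": r[1], "driver": r[2], "memory": r[3]}
        for r in rows
        if r  # skip blank lines
    ]


def check_inventory(gpus, expected=EXPECTED_GPUS):
    """Return a list of human-readable problems (empty if none were found)."""
    problems = []
    if len(gpus) != expected:
        problems.append(f"expected {expected} GPUs, found {len(gpus)}")
    if len({g["driver"] for g in gpus}) > 1:
        problems.append("GPUs report different driver versions")
    return problems
```

On the server itself, `check_inventory(parse_inventory(read_inventory()))` should return an empty list when all four cards are detected. Keeping the parsing separate from the `nvidia-smi` call makes the check easy to try on saved output from another machine.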

3. Good Practices for Operation

Constant Monitoring

- Temperature:

- Use the Supermicro server's Intelligent Platform Management Interface (IPMI) to monitor the temperatures of the CPUs, GPUs, memory, and other critical components.

- Use NVIDIA monitoring tools like nvidia-smi to check GPU temperature and usage.

- Set alerts for critical temperatures to prevent overheating and throttling.

- GPU Usage:

- Monitor GPU memory and engine usage to identify bottlenecks or underutilization.

- nvidia-smi provides detailed information about GPU usage.

- Energy Consumption:

- Monitor server power consumption through IPMI or rack-mounted power meters. This will help you understand the load and plan capacity.

- System Logs: Regularly review system logs (syslog, IPMI logs) for errors or warnings.
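The temperature, usage, and power checks above can be scripted around `nvidia-smi`. The sketch below is one possible approach, not a complete monitoring solution: the 80°C warning threshold is an illustrative assumption (take real limits from NVIDIA's documentation), and the helper names are hypothetical.

```python
import subprocess

# Illustrative threshold only; it is an assumption, not a value from NVIDIA.
TEMP_WARN_C = 80

FIELDS = "index,temperature.gpu,utilization.gpu,memory.used,memory.total,power.draw"


def read_gpu_metrics():
    """Run nvidia-smi once and return its raw CSV output."""
    cmd = ["nvidia-smi", f"--query-gpu={FIELDS}", "--format=csv,noheader,nounits"]
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


def _to_float(value):
    """Convert a field to float; unavailable readings such as [N/A] become None."""
    try:
        return float(value)
    except ValueError:
        return None


def parse_metrics(csv_text):
    """Parse one CSV line per GPU into a dict keyed by field name."""
    names = FIELDS.split(",")
    metrics = []
    for line in csv_text.strip().splitlines():
        values = [v.strip() for v in line.split(",")]
        metrics.append({n: _to_float(v) for n, v in zip(names, values)})
    return metrics


def hot_gpus(metrics, limit_c=TEMP_WARN_C):
    """Return the indices of GPUs at or above the warning temperature."""
    return [
        int(m["index"])
        for m in metrics
        if m["temperature.gpu"] is not None and m["temperature.gpu"] >= limit_c
    ]
```

Calling `hot_gpus(parse_metrics(read_gpu_metrics()))` from a cron job or a monitoring agent gives a simple starting point for alerts. IPMI sensor readings for the CPUs and chassis would need a separate tool.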

Preventive Maintenance

- Firmware and Software Updates:

- Keep your server's BIOS/UEFI up to date.

- Update your NVIDIA drivers and the CUDA Toolkit regularly to get the latest performance improvements and bug fixes.

- Keep your operating system and software libraries up to date.

- Physical Cleaning:

- Periodically clean the dust from inside the server and the fans. Dust can accumulate and affect cooling efficiency. This should be done with the server powered off and unplugged.

- Cable Check: Make sure all internal cables are securely connected and have not come loose.

Performance Optimization

- Software Configuration:

- CUDA and cuDNN: Make sure your applications are using the optimized versions of CUDA and cuDNN.

- AI Frameworks: Optimize AI frameworks (TensorFlow, PyTorch, etc.) to take full advantage of the H100's Hopper architecture features (Tensor Cores, Transformer Engine, FP8, HBM3).

- NVLink: Ensure your applications are designed to leverage NVLink for high-speed communication between GPUs, especially for large models or distributed training. You can inspect how the GPUs are interconnected with nvidia-smi topo -m.

- MIG (Multi-Instance GPU): If your workloads are small and you want to maximize utilization of a single GPU, consider MIG technology to partition an H100 into multiple GPU instances. With four H100 NVL GPUs, however, you will likely want to dedicate each GPU to an intensive task.

- Workload Management:

- Schedule task execution to optimize GPU utilization. For example, prevent multiple jobs from competing for the same GPU resources unless they are designed to share them.

- Consider using container orchestrators (Kubernetes) with the NVIDIA GPU Operator to efficiently manage and schedule GPU workloads.

- Energy and Performance Profiles:

- Use nvidia-smi -q -d PERFORMANCE to check the GPU's performance state (P-state) and any active throttling reasons.

- You can set a GPU power limit with nvidia-smi (the -pl option) if you need to cap power consumption for specific tasks, although for the H100 in this server, maximum performance is typically the goal.
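The workload-management advice above (one job per GPU, no competition for the same device) can be approximated without an orchestrator by restricting each process to a single GPU through the `CUDA_VISIBLE_DEVICES` environment variable. This is a minimal sketch under that assumption: `plan_batches`, `gpu_env`, and `launch` are hypothetical helper names, and a real scheduler would also track job completion.

```python
import os
import subprocess

GPU_COUNT = 4  # four H100 NVL cards in this configuration


def plan_batches(jobs, gpu_count=GPU_COUNT):
    """Split jobs into batches with at most one job per GPU.

    Each batch maps a GPU index to the job assigned to it.
    """
    batches = []
    for start in range(0, len(jobs), gpu_count):
        chunk = jobs[start:start + gpu_count]
        batches.append({gpu: job for gpu, job in enumerate(chunk)})
    return batches


def gpu_env(gpu_index):
    """Copy the current environment and expose only one GPU to CUDA."""
    return dict(os.environ, CUDA_VISIBLE_DEVICES=str(gpu_index))


def launch(command, gpu_index):
    """Start a command (list of arguments) that can only see the given GPU."""
    return subprocess.Popen(command, env=gpu_env(gpu_index))
```

For example, `plan_batches(["a", "b", "c", "d", "e"])` yields two batches: the first fills GPUs 0 to 3 and the second runs the remaining job on GPU 0 once the first batch has finished. For anything beyond a handful of jobs, Kubernetes with the NVIDIA GPU Operator or a batch scheduler is the more robust choice.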

Security

- Remote Access (IPMI): Protect IPMI access with strong passwords and consider using a separate management network.

- Security Updates: Regularly apply security patches to the operating system and all installed programs.

- Firewall: Configure a firewall to restrict unauthorized access to the server.

4. Backup and Recovery

- Regularly back up your important data and settings.

- Have a disaster recovery plan in case of hardware or software failures.

By following these best practices, you can ensure that your Supermicro AS-4125GS-TNRT2 server with NVIDIA H100 NVL 94GB GPUs runs optimally, stably, and efficiently for your most demanding AI and HPC workloads. Always consult the official Supermicro and NVIDIA manuals for the most accurate and model-specific information.

5. Calculating the Power Consumption of the Solution

Calculating the total power consumption of a Supermicro AS-4125GS-TNRT2 server with four NVIDIA H100 NVL 94GB GPUs involves adding up the power consumption of its main components at maximum load. It's important to note that actual power consumption will vary depending on the specific workload.

Here we break down the estimated consumption:

NVIDIA H100 NVL 94GB GPU Power Consumption

- Each NVIDIA H100 NVL 94GB PCIe has a maximum TDP (Thermal Design Power) of 400W. Some sources specify a range of 350W–400W, but for infrastructure calculations, it's always best to use the maximum value.

- With 4 H100 NVL GPUs: 4 × 400W = 1600W

Processor (CPU) consumption

The AS-4125GS-TNRT2 supports two AMD EPYC™ 9004/9005 Series processors. The TDP of these processors can vary significantly, from 125W to 400W per CPU, depending on the exact model and configuration.

- CPU TDP Range: Servers of this caliber usually run high-TDP CPUs. If we assume CPUs with a 360W–400W TDP (e.g., EPYC 9554, 9654, 9754, or 9754S), then:

- 2 x 360W CPUs: 2 × 360W = 720W

- 2 x 400W CPUs: 2 × 400W = 800W

Consumption of Other Server Components

This includes the motherboard, RAM, storage (NVMe/SATA), fans, network controllers, and the IPMI chip. These components also contribute to total power consumption, although to a lesser extent than the GPUs and CPUs.

- RAM: 24 DDR5 DIMM slots. Each DDR5 module can consume between 5W and 15W depending on the capacity and load type. For 24 modules (e.g., 24x64GB or 24x128GB), this adds up to between 120W and 360W.

- Storage (NVMe/SATA): High-performance NVMe storage devices can consume between 10W and 25W each under load. SATA HDDs/SSDs generally consume less. If you have, for example, 8 NVMe storage devices: 8x20W = 160W.

- Motherboard, fans, chipset, etc.: Estimate between 100W and 300W for these base components, depending on the activity of the fans (which adjust to the thermal load).

Calculation of Estimated Total Consumption (Peak Load)

Summing the high ranges for a "worst case" or maximum load estimate:

- GPUs: 1600W

- CPUs: 800W (assuming 2x 400W TDP)

- RAM: 360W (assuming 24x 15W DIMMs)

- Storage: 160W (assuming 8x 20W NVMe)

- Motherboard/Misc: 300W

Estimated Total Consumption (Maximum Load): 1600W + 800W + 360W + 160W + 300W = 3220W

Maximum heat output in BTU/h (1W ≈ 3.412 BTU/h): 3220W × 3.412 ≈ 10,987 BTU/h
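The sum and the BTU conversion above can be reproduced with a few lines of Python, which also makes it easy to swap in the figures for a different configuration. This is a minimal sketch: the dictionary keys and function names are illustrative, and the wattages are simply the worst-case assumptions from the breakdown.

```python
WATTS_TO_BTU_PER_HOUR = 3.412  # conversion factor used in the text

# Worst-case figures from the breakdown above, in watts.
PEAK_LOAD_W = {
    "GPUs (4 x 400 W)": 4 * 400,
    "CPUs (2 x 400 W)": 2 * 400,
    "RAM (24 x 15 W)": 24 * 15,
    "Storage (8 x 20 W)": 8 * 20,
    "Motherboard/misc": 300,
}


def total_watts(components):
    """Sum the per-component power figures."""
    return sum(components.values())


def to_btu_per_hour(watts):
    """Convert electrical power in watts to heat output in BTU/h."""
    return watts * WATTS_TO_BTU_PER_HOUR


total = total_watts(PEAK_LOAD_W)
print(f"Peak load: {total} W")                            # Peak load: 3220 W
print(f"Heat load: {to_btu_per_hour(total):,.0f} BTU/h")  # Heat load: 10,987 BTU/h
```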

Additional Considerations:

- Power Supplies: The Supermicro AS-4125GS-TNRT2 is equipped with four redundant 2000W (2+2) Titanium-level power supplies. The total installed capacity is 8000W, but in a 2+2 configuration two supplies carry the load and two act as backup, so the redundant capacity is 4000W. Drawing on all four supplies raises the ceiling toward 8000W at the cost of redundancy. The 3220W estimate fits within the 4000W redundant capacity.

- Power Supply Efficiency: Titanium-level power supplies are very efficient (typically 94-96% at medium loads). Because of conversion losses, the power measured at the wall outlet will be slightly higher than the sum of the components' TDPs. For 3220W drawn by the components, the power drawn from the wall would be approximately 3220W / 0.96 ≈ 3354W, or about 3426W at 94% efficiency.

- Actual vs. Theoretical Load: This is a theoretical maximum load calculation. In practice, actual power consumption will depend on the type of workload. For example, in AI training or HPC tasks, GPUs and CPUs may be at nearly 100% utilization, which would be close to this maximum value. For lighter or idle loads, power consumption will be significantly lower.

- Monitoring: As noted in the monitoring section, the best way to know the exact consumption is to measure it in real time through the server's IPMI or a power meter in the rack.
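The power-supply considerations above reduce to two quick checks: how much headroom remains under the 4000W redundant capacity, and what the wall draw looks like across the quoted efficiency range. The sketch below works under the text's own assumptions (2000W per supply, 2+2 redundancy, 3220W component load); the function names are hypothetical.

```python
PSU_RATED_W = 2000       # per power supply, from the text
ACTIVE_PSUS = 2          # 2+2 redundancy: two supplies carry the load
COMPONENT_LOAD_W = 3220  # worst-case estimate from the text


def wall_power(load_w, efficiency):
    """Input power needed to deliver load_w at the given PSU efficiency."""
    return load_w / efficiency


def output_headroom(load_w):
    """Watts left under the redundant capacity (negative means overloaded)."""
    return PSU_RATED_W * ACTIVE_PSUS - load_w


print(f"Redundant headroom: {output_headroom(COMPONENT_LOAD_W)} W")
for eff in (0.94, 0.96):
    watts = wall_power(COMPONENT_LOAD_W, eff)
    print(f"Efficiency {eff:.0%}: about {watts:.0f} W at the wall")
```

Sizing PDUs and circuits against the lower efficiency figure gives the more conservative result.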

In short, a Supermicro AS-4125GS-TNRT2 with four NVIDIA H100 NVL 94GB GPUs can easily consume over 3000W (3kW) under intensive GPU and CPU workloads. It's crucial that your power infrastructure (PDUs, electrical circuits) can sustain this demand continuously, in addition to considering the heat it will generate.