Configuring Supermicro Solutions for AI and Data Engineering

1. Assessing your AI project needs:

- Workload type: Is it primarily for AI model training (which requires a lot of GPU power and VRAM) or for inference (which can be less GPU-intensive but requires low latency)?

- Data scale: Will you be working with terabytes, petabytes, or more? This will influence the type and amount of storage needed.

- Processing speed: Do you need real-time performance or can you tolerate longer processing times?

- Budget: AI solutions can vary significantly in cost. Define your budget constraints.

- Space and Cooling: High-density AI solutions, especially those using many GPUs, generate a lot of heat and require adequate cooling infrastructure (liquid or air).
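As a rough illustration of why the workload type matters so much for GPU memory, the sketch below compares model-state memory for training and inference. The per-parameter byte counts are common rules of thumb (fp16 weights for inference; mixed-precision training with the Adam optimizer), not Supermicro figures, and the function names and the 80 GB per-GPU value are hypothetical. Activations, KV caches, and framework overhead are ignored, so real requirements will be higher.

```python
import math

# Rule-of-thumb constants (assumptions, not vendor figures).
BYTES_PER_PARAM_INFERENCE_FP16 = 2   # fp16/bf16 weights only
BYTES_PER_PARAM_TRAINING_ADAM = 16   # fp16 weights + fp16 gradients
                                     # + fp32 master weights, momentum, variance


def estimate_memory_gb(num_params: float, workload: str) -> float:
    """Approximate model-state memory in GB (10**9 bytes)."""
    if workload == "training":
        bytes_per_param = BYTES_PER_PARAM_TRAINING_ADAM
    elif workload == "inference":
        bytes_per_param = BYTES_PER_PARAM_INFERENCE_FP16
    else:
        raise ValueError(f"unknown workload: {workload!r}")
    return num_params * bytes_per_param / 1e9


def min_gpus(num_params: float, workload: str, gpu_memory_gb: float) -> int:
    """Smallest GPU count whose combined memory holds the model state."""
    return math.ceil(estimate_memory_gb(num_params, workload) / gpu_memory_gb)


if __name__ == "__main__":
    params = 7e9  # hypothetical 7-billion-parameter model
    for workload in ("inference", "training"):
        gb = estimate_memory_gb(params, workload)
        gpus = min_gpus(params, workload, gpu_memory_gb=80)
        print(f"{workload}: ~{gb:.0f} GB of model state, at least {gpus} GPU(s)")
```

Even under these simplified assumptions, the same model needs several times more memory to train than to serve, which is why the training-versus-inference question comes first.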

2. Supermicro Hardware Selection:

Supermicro specializes in offering "Building Block Solutions," allowing you to choose optimized components.

- GPU Servers (most important for AI):

- 8U/10U GPU Lines: For large-scale AI training and HPC applications, with modular designs.

- 4U/5U GPU Lines: Maximum acceleration and flexibility for AI/Deep Learning and HPC.

- 2U GPU Lines: High-performance and balanced solutions.

- 1U GPU Lines: Higher-density GPU platforms for deployments from the data center to the edge.

- GPU Options: Supermicro offers servers compatible with the latest GPUs from NVIDIA (such as the HGX H100/H200/B200 series, RTX PRO Blackwell), AMD Instinct (MI300A, MI350 series), and Intel Data Center GPU Max Series. Your choice will depend on your AI frameworks and performance requirements.

- Storage Servers: Crucial for Data Lakes and Data Engineering.

- All-Flash NVMe: For ultra-fast data access, which keeps GPUs supplied with data during training and supports low-latency inference.

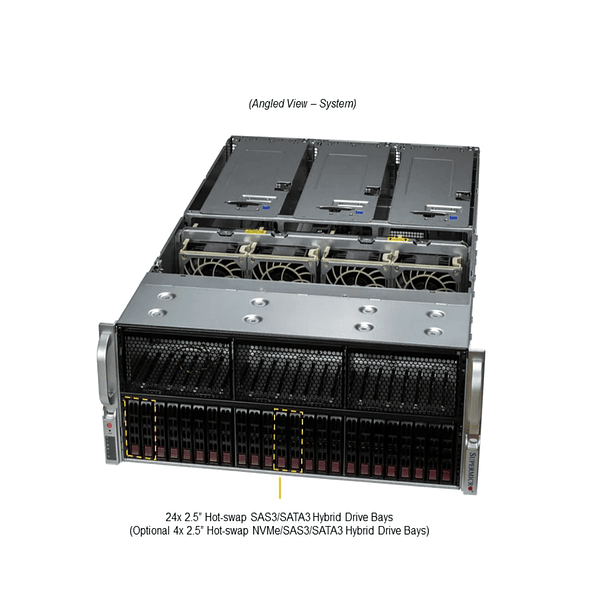

- High-density storage servers (e.g., 90-bay top-loading): For large volumes of data where capacity is a priority.

- JBOD (Just a Bunch Of Disks): To expand storage capacity.

- Software-Defined Storage (SDS) Support: Supermicro works with partners such as Pure Storage, WekaIO, Cloudian, and others to develop AI-optimized storage solutions.

- CPU: Servers with Intel Xeon or AMD EPYC processors, which provide the computing power for data preprocessing, AI orchestration, and other general system tasks.

- Networking: High-speed, low-latency connectivity (from 10GbE up to 400GbE) is vital for moving large data sets between storage, GPUs, and cluster nodes.

- Cooling: For high-density AI configurations, Supermicro offers liquid cooling solutions (Direct-to-Chip, Rear Door Heat Exchangers, Immersion Cooling) that can improve power efficiency and density.
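To make the networking point in the list above concrete, the following sketch estimates how long it takes to move a data set over links of different speeds. It is plain arithmetic under idealized assumptions: the function name and the `efficiency` parameter are hypothetical, and protocol overhead, storage throughput, and congestion are not modeled.

```python
def transfer_time_seconds(data_bytes: float, link_gbps: float,
                          efficiency: float = 1.0) -> float:
    """Idealized time to move data_bytes over a link of link_gbps gigabits/s.

    efficiency is a hypothetical factor (0 < efficiency <= 1) for the share
    of line rate actually achieved; 1.0 means full line rate.
    """
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_gbps must be positive and 0 < efficiency <= 1")
    bits = data_bytes * 8
    return bits / (link_gbps * 1e9 * efficiency)


if __name__ == "__main__":
    one_terabyte = 1e12  # bytes
    for gbps in (10, 100, 400):
        seconds = transfer_time_seconds(one_terabyte, gbps)
        print(f"1 TB over {gbps} Gb/s: about {seconds:.0f} s at line rate")
```

At line rate, one terabyte takes roughly 800 seconds over 10GbE and about 20 seconds over 400GbE, which is the kind of gap that decides whether GPUs sit idle waiting for data.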

3. Software and Orchestration:

Supermicro not only provides hardware, but also facilitates software integration and infrastructure management.

- AI/Data Software Platforms:

- Cloudera: Supermicro partners with Cloudera to provide enterprise solutions that integrate open source components such as Apache Kafka, NiFi, and Flink (for data streaming), Apache Spark (for data engineering and processing), the Hadoop Distributed File System (HDFS), Impala, Hive, and Iceberg (for data warehousing), and Kubernetes (for container management).

- NVIDIA AI Enterprise: A suite of software optimized for AI development and deployment on NVIDIA-certified systems.

- Container Orchestration: Kubernetes is widely used to schedule and scale containerized AI workloads, and Supermicro aims to simplify its deployment on these systems.

- Supermicro Management Tools:

- SuperCloud Composer (SCC): Enables management and orchestration of infrastructure at the data center level.

- SuperCloud Orchestrator: For automating cluster deployment.

- Vector Databases: For GenAI, solutions such as Milvus Distributed, KX, pgvector for PostgreSQL, Elasticsearch, and Neo4j are supported.
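As an illustration of the container orchestration item above, the sketch below builds a minimal Kubernetes Pod manifest that requests GPUs and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. It assumes the cluster runs the NVIDIA device plugin, which exposes GPUs under the `nvidia.com/gpu` resource name. The pod name, image name, and helper function are hypothetical placeholders, not part of any Supermicro tooling.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int) -> dict:
    """Build a minimal Pod manifest that requests `gpus` NVIDIA GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # GPUs are requested through limits; this resource name
                    # assumes the NVIDIA device plugin is installed.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # "training-job" and the image name are hypothetical examples.
    manifest = gpu_pod_manifest("training-job", "example.com/trainer:latest", 2)
    print(json.dumps(manifest, indent=2))
```

Generating the manifest in code rather than by hand makes it easier to template the GPU count per job, though a production setup would more likely use a Job or a higher-level operator than a bare Pod.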

4. Configuration and Implementation Process:

- Consultation and Design: Supermicro offers consulting services to design the solution that best suits your specific AI and data needs. This includes defining your data center architecture, floor plans, rack elevations, and bills of materials.

- Integration and Validation: Supermicro can pre-integrate and test the complete solution in the factory (rack-scale integration), including hardware and software. This reduces on-site deployment times.

- On-site deployment: Supermicro offers professional on-site deployment services.

- Support and Maintenance: Comprehensive support to ensure the continuous operation of your AI infrastructure.

Key considerations for data engineering with AI:

- Data Pipeline: Design a robust pipeline for ingesting, processing, storing, and delivering data to AI models. Supermicro supports this with solutions for data streaming (Kafka, NiFi, Flink), data engineering (Spark), and data lakes (HDFS, NVMe storage).

- GPUDirect Storage: To reduce I/O bottlenecks, this NVIDIA technology provides a direct data path between local or network storage and GPU memory, avoiding a bounce buffer in CPU system memory.

- Data security and governance: Ensure that data systems respect customer privacy and comply with regulations. Platforms like Cloudera integrate data provenance and security features.

- MLOps and DevOps: Integrate automation workflows for the AI model development lifecycle, from training to inference and deployment.
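The four pipeline stages named in the data pipeline item above (ingest, process, store, deliver) can be sketched with plain Python generators. This is a toy, in-memory illustration only: the stage functions, the record fields `id` and `value`, and the list standing in for a data lake are all hypothetical. A real deployment would use the streaming and storage systems listed in this guide.

```python
from typing import Iterable, Iterator


def ingest(raw_events: Iterable[dict]) -> Iterator[dict]:
    """Ingest: stand-in for a streaming source such as a message queue."""
    for event in raw_events:
        yield event


def process(events: Iterable[dict]) -> Iterator[dict]:
    """Process: drop records without a value and normalize the rest."""
    for event in events:
        if event.get("value") is None:
            continue
        yield {"id": event["id"], "value": float(event["value"])}


def store(records: Iterable[dict], sink: list) -> Iterator[dict]:
    """Store: persist each record (a list stands in for the data lake)."""
    for record in records:
        sink.append(record)
        yield record


def deliver(records: Iterable[dict], batch_size: int) -> Iterator[list]:
    """Deliver: group records into batches for a model to consume."""
    batch = []
    for record in records:
        batch.append(record)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # emit the final partial batch
        yield batch


def run_pipeline(raw_events: Iterable[dict], batch_size: int = 2):
    """Chain the four stages; returns (stored records, delivered batches)."""
    sink: list = []
    stages = store(process(ingest(raw_events)), sink)
    batches = list(deliver(stages, batch_size))
    return sink, batches


if __name__ == "__main__":
    raw = [{"id": 1, "value": "1.5"}, {"id": 2, "value": None},
           {"id": 3, "value": 2}, {"id": 4, "value": "4"}]
    stored, batches = run_pipeline(raw)
    print(len(stored), "records stored;", len(batches), "batches delivered")
```

Because each stage is a generator, records flow through one at a time rather than being materialized between stages, which mirrors how streaming pipelines keep memory use bounded.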

In short, setting up a Supermicro solution for data-driven AI engineering involves selecting high-performance GPU servers and scalable, high-speed storage, then integrating open source and proprietary software for data management and AI orchestration. Supermicro offers a "building block" approach and comprehensive services to simplify this process and accelerate implementation.