Setting up a Proxmox solution with Supermicro Petascale servers

Description

Setting up a solution with Supermicro Petascale servers and Proxmox is a major project, but the benefits in terms of performance and availability are significant.

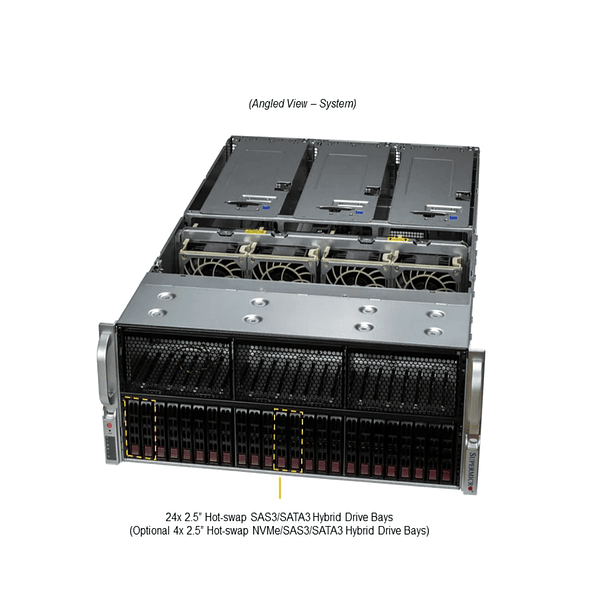

Understanding Supermicro Petascale Servers for Proxmox

Supermicro Petascale servers focus on delivering extreme storage density and performance, relying heavily on high-capacity NVMe SSDs (in form factors such as E1.S and E3.S, connected over PCIe Gen5). This is ideal for workloads requiring low latency and high I/O throughput, such as:

- High-performance virtualization: Proxmox can host virtual machines (VMs) and containers that will greatly benefit from this fast storage.

- Databases: Ideal for transactional or analytics-intensive databases.

- AI/ML and HPC: While some Petascale models include GPUs, the primary focus is on storing massive amounts of data for these workloads.

- Software-defined storage (SDS): Proxmox with Ceph or ZFS can take full advantage of the capacity and speed of these drives.

Proxmox Solution Design with Supermicro Petascale

Let's consider a Proxmox VE cluster for high availability and scalability.

1. Compute/Storage Nodes (Supermicro Petascale Servers):

- Recommended Models (Examples):

  - Supermicro Petascale All-Flash NVMe Servers (e.g., 1U models with 16 bays or 2U models with 32 E3.S/E1.S bays): These would be the base, offering very high NVMe PCIe Gen5 density. Look for models with 4th/5th Gen Intel Xeon Scalable or AMD EPYC 9004 Series processors for a balance of cores and PCIe performance. Some models incorporate NVIDIA Grace CPU Superchips for very specific AI/HPC workloads that benefit from the ARM architecture and LPDDR5X memory; since Proxmox VE officially targets x86-64, the Intel and AMD models are the natural fit for this design.

- Configuration per Node:

  - CPUs: Latest-generation Intel Xeon Scalable or AMD EPYC processors. The number of cores and the clock frequency will depend on the expected workload of the VMs. For virtualization, a higher core count is generally beneficial, since it allows more vCPUs to be allocated without heavy oversubscription.

  - RAM: Size RAM generously. Proxmox uses RAM for the host OS, the ZFS ARC cache (if you're using ZFS for storage), and, of course, for the VMs. 256GB, 512GB, or even 1TB+ per node are common in Petascale environments. Consider DDR5 for the latest processors.

  - Storage (NVMe Drives): This is where Petascale shines.

    - Proxmox OS Drives: Two small NVMe drives (e.g., 240GB-480GB) in a ZFS mirror (RAID1) for the Proxmox OS.

    - VM/Container/Storage Drives: Remaining NVMe bays populated with high-capacity drives (e.g., 15TB, 30TB, or more) for primary storage.

      - Option A: ZFS on each node (local): You can set up ZFS pools with RAIDZ, RAIDZ2, or mirrors directly on each node using these NVMe drives. Proxmox has excellent integration with ZFS. This is easier to manage, but the storage stays local to each node.

      - Option B: Ceph (Distributed Storage): If you need shared, highly available, and scalable storage across cluster nodes, Ceph is an excellent choice. The NVMe drives on the Petascale servers would serve as Ceph OSDs, providing exceptional performance. You'd need at least three nodes for a robust Ceph cluster.

  - Networking: This is crucial for a Proxmox cluster and even more so with Petascale/Ceph storage.

    - Management/VM Network: At least two 10GbE or 25GbE ports per node (LACP bonded or active/passive) for VM connectivity and Proxmox management.

    - Ceph Network/Storage (if applicable): If you're using Ceph, it's highly recommended to have a dedicated 25GbE, 50GbE, or even 100GbE network for Ceph traffic (both the public network and the cluster network that carries replication). This prevents the network from becoming the bottleneck in front of the NVMe drives.
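
To compare Option A and Option B on capacity, a rough estimate can help. The following is a minimal sketch, not a sizing tool: the function names are hypothetical, the ZFS figure assumes a single vdev and ignores metadata overhead, and the 80% fill ratio for Ceph is an assumed safety margin rather than a value from this article.

```python
"""Rough usable-capacity estimates for local ZFS and replicated Ceph (illustrative only)."""


def zfs_usable_tb(drives: int, drive_tb: float, layout: str) -> float:
    """Approximate usable TB of one ZFS vdev, ignoring metadata and padding overhead."""
    parity = {"raidz": 1, "raidz2": 2}
    if layout == "mirror":
        # Two-way mirrors keep one copy of the data out of every two drives.
        if drives < 2 or drives % 2:
            raise ValueError("two-way mirrors need an even number of drives")
        return drives * drive_tb / 2
    if layout in parity:
        # RAIDZ/RAIDZ2 give up one or two drives' worth of space to parity.
        if drives <= parity[layout]:
            raise ValueError("not enough drives for this layout")
        return (drives - parity[layout]) * drive_tb
    raise ValueError(f"unknown layout: {layout}")


def ceph_usable_tb(nodes: int, drives_per_node: int, drive_tb: float,
                   replicas: int = 3, fill_ratio: float = 0.8) -> float:
    """Approximate usable TB of a replicated Ceph pool spanning all nodes.

    fill_ratio is an assumed headroom factor so the cluster is not planned full.
    """
    if nodes < replicas:
        raise ValueError("need at least as many nodes as replicas")
    raw_tb = nodes * drives_per_node * drive_tb
    return raw_tb / replicas * fill_ratio


if __name__ == "__main__":
    # Assumed example: 3 nodes, 16 bays each, 2 bays used for OS drives, 15 TB data drives.
    print("ZFS RAIDZ2 per node (TB):", zfs_usable_tb(14, 15, "raidz2"))
    print("ZFS mirrors per node (TB):", zfs_usable_tb(14, 15, "mirror"))
    print("Ceph 3x cluster-wide (TB):", round(ceph_usable_tb(3, 14, 15), 1))
```

Note that the ZFS numbers are per node while the Ceph number is for the whole cluster, which reflects the trade-off between the two options: local ZFS keeps more usable space, while Ceph spends capacity on replicas to make the storage shared.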

2. Proxmox VE Cluster Configuration:

- Installation: Install Proxmox VE on each Supermicro server.

- Network Configuration: Configure separate network interfaces for management, VM traffic, and storage traffic (if using Ceph).

- Cluster Creation: Join all nodes to form a Proxmox VE cluster, which enables high availability (HA) of VMs and live migration.

- Storage:

  - ZFS Local (if not using Ceph): Set up ZFS pools on each node for local VM storage. You can replicate VM disks between nodes with Proxmox VE's built-in storage replication, which is based on ZFS snapshots.

  - Ceph (for shared storage/SDS):

    - Install Ceph components on each Proxmox node.

    - Configure the NVMe drives as Ceph OSDs (Object Storage Daemons).

    - Create Ceph pools with the desired replication level (e.g., 3x replication for high redundancy).

    - Proxmox integrates directly with Ceph storage, allowing VMs to be created on these distributed pools.

- Proxmox Backup Server (Optional but highly recommended):

  - Set up a dedicated backup server (this can be another Supermicro server, not necessarily Petascale, but with good HDD capacity and network connectivity).

  - Integrate Proxmox Backup Server with your Proxmox cluster to perform efficient, deduplicated backups of your VMs and containers.

- High Availability (HA): Configure HA resources in Proxmox so that critical VMs are automatically restarted on another node in the cluster in the event of a node failure.
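
The configuration steps above can be sketched as an ordered command plan. This is a hedged illustration that only prints commands and never runs them. The `pvecm`, `pveceph`, and `ha-manager` subcommands are written from memory of the Proxmox VE CLI, so check them against the man pages for your version. The helper names, IP addresses, device paths, pool name, and VM IDs are all hypothetical.

```python
"""Build a printable command plan for a Ceph-backed Proxmox VE cluster (no execution)."""
from typing import List


def tolerated_failures(nodes: int) -> int:
    """Nodes that can fail while a strict majority (the cluster quorum) is still present."""
    quorum = nodes // 2 + 1
    return nodes - quorum


def build_cluster_plan(cluster_name: str, node_ips: List[str], ceph_network: str,
                       osd_devices: List[str], pool_name: str, ha_vmids: List[int],
                       size: int = 3, min_size: int = 2) -> List[str]:
    """Return the steps in order, each prefixed with the node it would run on."""
    if len(node_ips) < 3:
        raise ValueError("plan assumes at least three nodes for quorum and Ceph")
    first = node_ips[0]
    # Create the cluster on the first node, then join the others to it.
    plan = [f"[{first}] pvecm create {cluster_name}"]
    plan += [f"[{ip}] pvecm add {first}" for ip in node_ips[1:]]
    # Install Ceph everywhere, initialise it once, then add monitors.
    plan += [f"[{ip}] pveceph install" for ip in node_ips]
    plan.append(f"[{first}] pveceph init --network {ceph_network}")
    plan += [f"[{ip}] pveceph mon create" for ip in node_ips]
    # Turn every listed NVMe device on every node into an OSD.
    plan += [f"[{ip}] pveceph osd create {dev}" for ip in node_ips for dev in osd_devices]
    # One replicated pool, registered as Proxmox storage.
    plan.append(f"[{first}] pveceph pool create {pool_name} "
                f"--size {size} --min_size {min_size} --add_storages")
    # Mark the critical VMs as HA resources.
    plan += [f"[{first}] ha-manager add vm:{vmid}" for vmid in ha_vmids]
    return plan


if __name__ == "__main__":
    nodes = ["10.0.0.11", "10.0.0.12", "10.0.0.13"]  # placeholder addresses
    for step in build_cluster_plan("pve-demo", nodes, "10.10.10.0/24",
                                   ["/dev/nvme2n1", "/dev/nvme3n1"], "vm-pool", [100, 101]):
        print(step)
    print("node failures tolerated:", tolerated_failures(len(nodes)))
```

Keeping the plan as plain strings makes it easy to review the order of operations before touching real hardware. The majority calculation also shows why three nodes is the practical minimum: a three-node cluster keeps quorum with one node down, while a two-node cluster cannot lose any.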

3. Additional Considerations:

- Power Redundancy: Ensure Supermicro Petascale servers are connected to redundant power sources (UPS, dual PDUs).

- Monitoring: Implement robust monitoring for Supermicro hardware (IPMI, sensors) and Proxmox/Ceph cluster status.

- Maintenance: Schedule maintenance windows for Supermicro firmware updates and Proxmox patches.

- Distributor: Remember that for Chile, Super Latam is the most important authorized Supermicro distributor. They can advise you on selecting the specific Petascale models that best fit your budget and needs, and provide local support.

- Documentation: Proxmox's documentation is excellent for setting up clusters, ZFS, and Ceph.

- Training and Experience: If you have no prior experience with Ceph or complex Proxmox clusters, consider expert training or support.
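
As one small piece of the monitoring item above, the sketch below maps the first token of `ceph health` output (`HEALTH_OK`, `HEALTH_WARN`, `HEALTH_ERR`) to a severity code. The function names are hypothetical, and the 0/1/2/3 codes follow a convention common in monitoring plugins rather than anything this article prescribes. Hardware checks through IPMI are not covered.

```python
"""Classify `ceph health` output into a severity code (illustrative sketch)."""
import shutil
import subprocess
from typing import Tuple

# Assumed convention: 0 = OK, 1 = warning, 2 = critical, 3 = unknown.
SEVERITY = {"HEALTH_OK": 0, "HEALTH_WARN": 1, "HEALTH_ERR": 2}


def classify_ceph_health(output: str) -> Tuple[int, str]:
    """Return (code, label) based on the first whitespace-separated token."""
    tokens = output.split()
    status = tokens[0] if tokens else ""
    if status not in SEVERITY:
        return 3, "UNKNOWN"
    return SEVERITY[status], status


def read_ceph_health() -> str:
    """Run `ceph health` if the CLI is installed; otherwise return an empty string."""
    if shutil.which("ceph") is None:
        return ""
    result = subprocess.run(["ceph", "health"], capture_output=True,
                            text=True, timeout=30)
    return result.stdout


if __name__ == "__main__":
    code, label = classify_ceph_health(read_ceph_health())
    print(f"ceph health: {label} (code {code})")
```

On a machine without the Ceph CLI this reports `UNKNOWN`, which is the safer default for an alerting pipeline than silently reporting success.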

Advantages of this Solution:

- Extreme Performance: Supermicro Petascale NVMe drives combined with Proxmox and Ceph/ZFS will deliver exceptional I/O performance.

- High Availability: With Proxmox HA and Ceph storage, services recover automatically if a node fails: affected VMs are restarted on the surviving nodes, and their data remains available on the shared storage.

- Scalability: You can scale both compute capacity (by adding more Proxmox nodes) and storage (by adding more OSDs to Ceph).

- Flexibility: Proxmox allows you to run a mix of virtual machines and containers (LXC), giving you flexibility for different workloads.

- Efficiency: Using Proxmox (open source) and the energy efficiency of Supermicro servers (part of their "We Keep IT Green®" initiative) can help reduce operating costs.

