Setting up a hyperconverged infrastructure (HCI) with Supermicro servers and Proxmox VE (Virtual Environment)

Building a hyperconverged infrastructure (HCI) on Supermicro servers with Proxmox VE combines the flexibility of Proxmox with Supermicro's robust hardware, which makes it a good fit for many businesses and enthusiasts.

Here's a step-by-step guide to setting up your HCI, including important considerations and best practices:

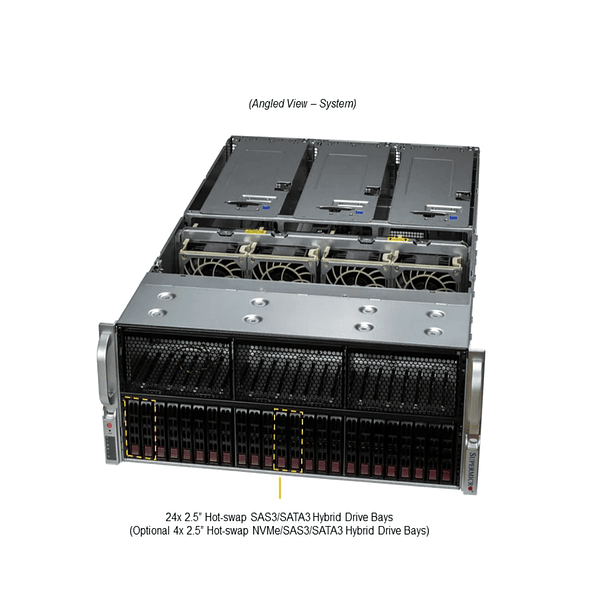

1. Planning and Hardware (Supermicro)

The choice of Supermicro hardware is crucial to the performance and reliability of your HCI.

- Number of Nodes:

- Minimum 3 nodes: For Proxmox with Ceph (the recommended distributed storage for HCI), a minimum of 3 nodes is recommended to ensure redundancy and resiliency. With 3 nodes, you can tolerate the failure of one node and still maintain quorum and data availability.

- 5 or more nodes: For critical production environments and greater fault tolerance (e.g., tolerating the failure of two nodes), 5 or more nodes are recommended. An odd number of nodes is preferable for quorum in a cluster.

- CPU: Intel Xeon (Scalable) or AMD EPYC processors are ideal. Make sure you have enough cores and clock speed for your expected workloads.

- RAM: RAM is critical for Proxmox and Ceph.

- Proxmox VE: Requires RAM for the OS and VMs/LXC.

- Ceph: Ceph consumes a significant amount of RAM. As a rough rule of thumb, budget about 1-2 GB per TB of OSD storage; actual usage depends on the OSD memory settings and rises during recovery and rebalancing. Plan for at least 64 GB per node, and preferably 128 GB, 256 GB, or more, depending on storage capacity and the number of VMs. Use ECC RAM.

- Proxmox OS disk: A small SSD (128GB–256GB) for installing Proxmox. RAID 1 (hardware or ZFS mirror) can be used for the OS disk for increased reliability.

- Disks for Ceph OSDs:

- NVMe SSDs: These are the best choice for Ceph OSDs due to their high performance and low latency, ideal for IOPS-intensive workloads.

- Enterprise SATA SSDs: A good choice for balancing performance and cost.

- SAS HDDs with SSDs for WAL/DB: If you need a lot of storage capacity at a lower cost, you can use SAS HDDs for the OSDs and dedicated SSDs for the Ceph Write-Ahead Log (WAL) and Database (DB), which significantly improves write performance. However, for HCI, all-flash (NVMe/SSD) solutions are generally preferable.

- Quantities: At least four OSDs per node are recommended, evenly distributed. Use enterprise-grade disks with power loss protection (PLP) for Ceph.

- Avoid hardware RAID for OSDs: Ceph manages redundancy at the software level. It's best to present Ceph disks directly to the operating system ("IT mode" on RAID/HBA controllers, or directly if they're NVMe).

- Network: 10GbE or higher is essential for Ceph traffic (public and cluster networks) and VM migration. Consider 25GbE or even 40/100GbE for high-demand environments.

- Multiple NICs: It is recommended to have dedicated NICs for:

- Ceph network (public and cluster): A pair of 10GbE+ ports, ideally on a separate VLAN.

- VM/Client Network: Another pair of 10GbE+ ports for virtual machine traffic.

- Management Network/Corosync: This can be 1GbE, but can also be combined with the VM network if traffic isn't excessive. Corosync requires low latency.
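The sizing guidance above can be turned into a quick back-of-the-envelope estimate. The sketch below is only an illustration: the `NodePlan` class, the 8 GB host reserve, and the example disk sizes are assumptions, the RAM figure models nothing beyond the 1-2 GB per TB rule of thumb, and the usable-capacity figure assumes a replicated pool with three copies.

```python
from dataclasses import dataclass


@dataclass
class NodePlan:
    """Hypothetical per-node plan; every field is an input you choose."""
    osd_count: int             # OSD disks in the node
    osd_size_tb: float         # raw capacity of each OSD disk, in TB
    vm_ram_gb: float           # RAM intended for VMs and containers
    host_ram_gb: float = 8.0   # assumed reserve for the Proxmox host itself


def ceph_ram_gb(plan: NodePlan, gb_per_tb: float = 2.0) -> float:
    """Ceph RAM estimate using the 1-2 GB per TB rule of thumb."""
    return plan.osd_count * plan.osd_size_tb * gb_per_tb


def node_ram_gb(plan: NodePlan, gb_per_tb: float = 2.0) -> float:
    """RAM to plan for on one node: host reserve + VMs + Ceph."""
    return plan.host_ram_gb + plan.vm_ram_gb + ceph_ram_gb(plan, gb_per_tb)


def usable_capacity_tb(plan: NodePlan, nodes: int, replicas: int = 3) -> float:
    """Raw cluster capacity divided by the replica count, before any headroom."""
    return nodes * plan.osd_count * plan.osd_size_tb / replicas


if __name__ == "__main__":
    # Example values only: four 3.84 TB OSDs per node, 96 GB reserved for VMs.
    plan = NodePlan(osd_count=4, osd_size_tb=3.84, vm_ram_gb=96)
    print(f"Ceph RAM per node : {ceph_ram_gb(plan):.1f} GB")
    print(f"Total RAM per node: {node_ram_gb(plan):.1f} GB")
    print(f"Usable capacity   : {usable_capacity_tb(plan, nodes=3):.2f} TB")
```

Treat the result as a lower bound: a real cluster also needs free space and spare RAM so that Ceph can rebalance after a disk or node failure.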

2. Installing Proxmox VE on each Node

- Download the ISO: Download the latest Proxmox VE ISO from the official website.

- Create installation media: Use a tool like Rufus or Balena Etcher to create a bootable USB, or mount the ISO via IPMI on your Supermicro servers.

- Installation:

- Boot from the installation media on each Supermicro server.

- Follow the on-screen instructions.

- Make sure to install Proxmox on the disk/RAID dedicated to the operating system.

- Configure the IP address, subnet mask, gateway, and DNS for each node. Use fixed IP addresses.

3. Proxmox Cluster Configuration

Once Proxmox VE is installed on all nodes:

- Create the Cluster (from the first node):

- Access the Proxmox web interface ( https://<node_IP>:8006 ).

- Go to Datacenter -> Cluster.

- Click Create Cluster.

- Give the cluster a name and click Create.

- Copy the Join Information displayed.

- Join the Remaining Nodes (from each of them):

- Access the Proxmox web interface of the node you want to join.

- Go to Datacenter -> Cluster.

- Click Join Cluster.

- Paste the join information copied from the first node.

- Enter the root user password of the first node.

- Select the network interface for cluster traffic (Corosync). Ideally, use a dedicated interface: Corosync needs low latency rather than high bandwidth, so avoid sharing its link with Ceph or other heavy traffic.

- Click Join. Repeat for all nodes.
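The same cluster setup can also be done from a shell with the `pvecm` tool. As a sketch, the helper below only builds the command lines and does not execute anything; the function name `cluster_plan`, the cluster name, and the IP addresses are made up for illustration, and the addresses are assumed to be the ones each node uses for Corosync.

```python
import shlex
from typing import List, Sequence, Tuple


def cluster_plan(cluster_name: str,
                 corosync_ips: Sequence[str]) -> List[Tuple[str, List[str]]]:
    """Return (node, command) pairs: create on the first node, add on the rest."""
    if not corosync_ips:
        raise ValueError("at least one node is required")
    first, *rest = corosync_ips
    # Run on the first node: create the cluster and bind link 0 to its address.
    plan = [(first, ["pvecm", "create", cluster_name, "--link0", first])]
    # Run on every other node: join via the first node, using its own address.
    # pvecm asks for the first node's root password interactively.
    for ip in rest:
        plan.append((ip, ["pvecm", "add", first, "--link0", ip]))
    # Finally, check membership and quorum from the first node.
    plan.append((first, ["pvecm", "status"]))
    return plan


if __name__ == "__main__":
    # Hypothetical cluster name and addresses.
    for node, argv in cluster_plan("hci-cluster",
                                   ["10.10.20.11", "10.10.20.12", "10.10.20.13"]):
        print(f"[{node}] {shlex.join(argv)}")
```

Printing the plan first, rather than running it, makes it easy to review which command belongs on which node before touching the servers.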

4. Storage Configuration (Ceph)

Ceph is the key component for hyperconverged storage in Proxmox.

- Check Disks: On each node, select the node and go to Disks. Make sure all the disks you want to use for Ceph OSDs are visible and not in use.

- Initialize Ceph (on one node of the cluster):

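- Select a node and go to Ceph.

- If Ceph is not installed yet, click Install Ceph and follow the wizard to install the packages and create the initial configuration. Install the Ceph packages on every node that will host OSDs, and create monitors on at least three nodes so that the storage layer keeps its own quorum when a node fails.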

- Create OSDs: Select the node that holds the disks and go to Ceph -> OSD.

- Click Create OSD.

- Select the disks you want to add as OSDs on that node.

- Leave the device class on auto-detect, or set it explicitly to ssd or nvme if the drive type is not detected correctly.

- Click Create. Repeat this process on each node to add their respective disks as OSDs. Ceph will automatically distribute the Placement Groups (PGs) and data across all OSDs in the cluster.

- Create a Pool: Select any node and go to Ceph -> Pools.

- Click Create.

- Define a name for your pool (e.g. rbd-storage).

- Size (Replication Factor): For high availability, a size of 3 is standard, meaning each piece of data is stored as three copies. With the default CRUSH rule, the copies are placed on different nodes, so the pool survives the loss of an entire node without losing data. Data is lost only if all three copies fail, but I/O pauses as soon as fewer than Min Size copies remain.

- Min Size: The minimum number of replicas required for write/read operations to be possible. Typically set to 2 for a size of 3.

- Crush Rule: Select the appropriate CRUSH rule. On current Ceph releases the default is replicated_rule, which distributes replicas across hosts.

- Click Create.

- Add the Pool as Proxmox Storage: Go to Datacenter -> Storage.

- Click Add -> RBD (Ceph Block Device).

- Assign an ID for the storage (e.g. ceph-rbd).

- Select the Ceph pool you created.

- Check the types of content you want to store (Disk Images, Containers, etc.).

- Click Add. You'll now have shared storage available on all nodes in the cluster.
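To make the interaction between the pool's size and Min Size settings concrete, the sketch below classifies what happens to one piece of data as copies are lost. It is a simplified model, not Ceph's actual state machine: the function name `replica_state` and the state labels are invented for this example.

```python
def replica_state(size: int, min_size: int, failed_copies: int) -> str:
    """Describe data availability after some replicas have failed."""
    if not 1 <= min_size <= size:
        raise ValueError("min_size must be between 1 and size")
    if not 0 <= failed_copies <= size:
        raise ValueError("failed_copies must be between 0 and size")
    remaining = size - failed_copies
    if remaining == 0:
        return "data lost"                  # no copy left to recover from
    if remaining < min_size:
        return "I/O blocked"                # data intact, but reads/writes pause
    if remaining < size:
        return "degraded, I/O continues"    # Ceph re-creates the missing copies
    return "healthy"


if __name__ == "__main__":
    # The size=3 / min_size=2 combination described above.
    for failed in range(4):
        print(f"{failed} failed copies -> {replica_state(3, 2, failed)}")
```

With size 3 and Min Size 2, losing one copy keeps the pool online, losing two pauses I/O until a copy is restored, and only the loss of all three copies destroys data.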

5. Network Configuration (Bridging)

Proxmox uses network bridges (Linux bridges) to connect VMs and containers to the physical network.

- Configuration on each node:

- Go to System -> Network on each node.

- Identify your physical network interfaces (e.g. enp1s0, enp2s0).

- Create a new Linux Bridge (vmbr0, vmbr1, etc.).

- Assign one of your physical interfaces to the bridge (e.g. Bridge ports: enp1s0).

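- Configure the IP address and gateway on the bridge used for management. Only one interface per node should carry the default gateway, and a bridge that only carries VM traffic does not need an IP address of its own.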

- Repeat for the network interfaces dedicated to Ceph, if you have any, by creating separate bridges or configuring VLANs.

- Apply the settings.
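Behind the web interface, these bridges end up as stanzas in /etc/network/interfaces on each node. As a rough illustration of what such a stanza looks like, the helper below renders one as text; the function name `render_bridge`, the interface names, and the addresses are hypothetical, and the output should be compared against what the GUI generates before being used on a real node.

```python
from typing import Optional, Sequence


def render_bridge(name: str,
                  ports: Sequence[str],
                  address: Optional[str] = None,
                  gateway: Optional[str] = None) -> str:
    """Render a Linux bridge stanza in /etc/network/interfaces syntax."""
    if gateway and not address:
        raise ValueError("a gateway requires an address on the same bridge")
    # Bridges without an IP (VM traffic only) use the 'manual' method.
    method = "static" if address else "manual"
    port_list = " ".join(ports) if ports else "none"
    lines = [f"auto {name}", f"iface {name} inet {method}"]
    if address:
        lines.append(f"    address {address}")
    if gateway:
        lines.append(f"    gateway {gateway}")
    lines += [
        f"    bridge-ports {port_list}",
        "    bridge-stp off",
        "    bridge-fd 0",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    # Management bridge with an IP and the node's only default gateway.
    print(render_bridge("vmbr0", ["enp1s0"], "192.168.1.10/24", "192.168.1.1"))
    print()
    # VM-only bridge: no IP address on the host side.
    print(render_bridge("vmbr1", ["enp2s0"]))
```

Generating the text from one function per node keeps bridge names and port assignments consistent across the cluster, which matters because VMs expect the same bridge name on whichever node they migrate to.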

6. High Availability (HA)

Proxmox allows you to configure high availability for VMs and containers, which means they will automatically restart on another node if the primary node fails.

- Configure HA Resources:

- Go to Datacenter -> HA -> Resources.

- Click Add.

- Select the VM or container you want to protect with HA.

- Make sure the VM/Container is stored in Ceph storage (RBD), as this allows it to be accessible from any node.

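- Configure the Max. Restart and Max. Relocate options, which limit how many times HA tries to restart the guest on the same node and how many times it tries to relocate it to another node.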
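HA resources can also be registered from a shell with the `ha-manager` tool. The sketch below only assembles the command line for one guest and does not run it; the function name `ha_add_command` and the VM ID are placeholders, and the restart and relocate limits of 1 are example values.

```python
import shlex
from typing import List


def ha_add_command(vmid: int,
                   kind: str = "vm",
                   max_restart: int = 1,
                   max_relocate: int = 1) -> List[str]:
    """Build an 'ha-manager add' command for a VM ('vm') or container ('ct')."""
    if kind not in ("vm", "ct"):
        raise ValueError("kind must be 'vm' or 'ct'")
    if max_restart < 0 or max_relocate < 0:
        raise ValueError("limits must not be negative")
    return [
        "ha-manager", "add", f"{kind}:{vmid}",
        "--state", "started",                 # HA should keep the guest running
        "--max_restart", str(max_restart),    # restart attempts on the same node
        "--max_relocate", str(max_relocate),  # relocation attempts to other nodes
    ]


if __name__ == "__main__":
    # Hypothetical VM ID 100.
    print(shlex.join(ha_add_command(100)))
```

Running the printed command on any cluster node has the same effect as adding the resource in the web interface.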

7. Monitoring and Maintenance

- Ceph Monitoring: The Proxmox web interface provides an overview of Ceph status, including cluster health, OSDs, PGs, and performance.

- Updates: Keep Proxmox VE and your Supermicro servers' firmware up to date.

- Backups: Implement a robust backup solution, such as Proxmox Backup Server (PBS), to protect your VMs and data.

- Recovery Testing: Perform controlled failure testing to ensure your cluster recovers as expected.
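For scripted monitoring alongside the web interface, the output of `ceph health --format json` can be condensed into a few lines. The sketch below assumes that output contains a top-level `status` field and a `checks` mapping whose entries carry a `severity` and a `summary.message`; the sample document is hand-written for illustration, not captured from a real cluster, so verify the field names against your Ceph release.

```python
import json
from typing import List


def summarize_health(raw_json: str) -> List[str]:
    """Turn a Ceph health JSON document into short human-readable lines."""
    report = json.loads(raw_json)
    lines = [f"status: {report.get('status', 'UNKNOWN')}"]
    # Each active health check is listed with its severity and message.
    for name, check in sorted(report.get("checks", {}).items()):
        severity = check.get("severity", "?")
        message = check.get("summary", {}).get("message", "")
        lines.append(f"{name} [{severity}]: {message}")
    return lines


if __name__ == "__main__":
    # Hand-written sample in the assumed format, for demonstration only.
    sample = json.dumps({
        "status": "HEALTH_WARN",
        "checks": {
            "OSD_DOWN": {
                "severity": "HEALTH_WARN",
                "summary": {"message": "1 osds down"},
            }
        },
    })
    for line in summarize_health(sample):
        print(line)
```

During a controlled failure test, running such a summary before, during, and after the failure gives a simple record of how the cluster degraded and recovered.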

Additional Considerations and Best Practices

- Supermicro BIOS/Firmware: Update your Supermicro servers' BIOS and firmware to the latest version for compatibility and optimal performance with Proxmox.

- Drivers: Proxmox is based on Debian, so drivers are generally well supported. However, on very new or specific hardware, it may be necessary to check for driver availability.

- Disk Performance: Use tools like fio to test your disk performance before setting up Ceph and ensure you meet the requirements.

- Network Separation: For optimal performance and security, it is highly recommended to separate Ceph traffic (Ceph private network), Proxmox management traffic (Corosync), and VM/client traffic into separate VLANs or even physical interfaces.

- Quorum: A cluster needs a strict majority of votes to keep quorum, so with an even number of nodes, losing exactly half of them costs the cluster its quorum. An odd number of nodes is more resilient to this problem. For two-node clusters, an external Quorum Device (QDevice) is required to maintain quorum in the event of a node failure.

- Documentation: Document your configuration, including IP addresses, hostnames, network settings, and any customizations.
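The quorum arithmetic behind the node-count recommendations is simple enough to check directly. The sketch below assumes every node (and a QDevice, if present) contributes exactly one vote; the function names are chosen for this example.

```python
def votes_for_quorum(total_votes: int) -> int:
    """Strict majority: more than half of all votes."""
    if total_votes < 1:
        raise ValueError("a cluster needs at least one vote")
    return total_votes // 2 + 1


def tolerated_failures(total_votes: int) -> int:
    """How many votes can be lost while a majority still remains."""
    return total_votes - votes_for_quorum(total_votes)


if __name__ == "__main__":
    for nodes in range(2, 8):
        print(f"{nodes} nodes: quorum needs {votes_for_quorum(nodes)} votes, "
              f"tolerates {tolerated_failures(nodes)} failure(s)")
```

Under this model a four-node cluster tolerates no more failures than a three-node one, which is why odd node counts are preferred. A two-node cluster plus a QDevice has three votes in total and can therefore survive the loss of one node.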

By following these steps and considerations, you'll be able to set up a robust and efficient HCI infrastructure with Supermicro and Proxmox VE.