Setting up a Data Streaming Solution with Supermicro

Supermicro offers a wide range of servers and components suited to high-performance data streaming. The configuration below uses Supermicro components and focuses on the high availability, low latency, and high throughput that data streaming requires:

Data streaming involves the ingestion, processing, and analysis of large volumes of data in real time. This requires servers with high processing power, fast storage, and a low-latency network.

Suggested General Architecture:

I propose a distributed, cluster-based architecture, with specific Supermicro components for each layer:

- Ingestion Layer (Front-End/Edge): Collects data from various sources.

- Processing Layer (Middle-Tier): Performs transformations, filtering, and analysis in real time.

- Storage Layer (Back-End): Persists processed data for later analysis or long-term storage.
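The three layers can be pictured as stages of one pipeline, each handing data to the next. The Python sketch below is a toy model, not production code: the `ingest`, `process`, and `Store` names, the `sensor,value` record format, and the alert threshold are all hypothetical. Each stage consumes the previous one lazily, much as each hardware layer forwards data downstream.

```python
from typing import Iterable, Iterator

Record = dict  # hypothetical record: {"sensor": str, "value": float, ...}


def ingest(raw_lines: Iterable[str]) -> Iterator[Record]:
    """Ingestion layer: parse 'sensor,value' lines, dropping malformed input at the edge."""
    for line in raw_lines:
        parts = line.strip().split(",")
        if len(parts) != 2:
            continue  # wrong number of fields
        sensor, value = parts
        try:
            yield {"sensor": sensor, "value": float(value)}
        except ValueError:
            continue  # non-numeric value


def process(records: Iterable[Record], threshold: float) -> Iterator[Record]:
    """Processing layer: filter and enrich records in flight."""
    for rec in records:
        if rec["value"] >= threshold:
            yield {**rec, "alert": True}


class Store:
    """Storage layer stand-in; a real deployment would write to a database or data lake."""

    def __init__(self) -> None:
        self.rows: list[Record] = []

    def persist(self, records: Iterable[Record]) -> int:
        """Append records and return how many were stored."""
        count = 0
        for rec in records:
            self.rows.append(rec)
            count += 1
        return count
```

For example, `Store().persist(process(ingest(["s1,10.5", "bad line", "s2,99.0"]), threshold=50.0))` stores only the `s2` reading: the malformed line is dropped at ingestion and `s1` is filtered out during processing.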

Supermicro Components in Detail:

1. Ingestion Layer (Edge Computing / Front-End Servers)

For data ingestion, especially if it comes from multiple distributed sources or IoT devices, we need servers with good I/O capacity and flexible connectivity options.

- Suggested Servers:

- Supermicro Edge & Telecom Servers (e.g., Fanless Edge Systems or Compact Edge Systems): For scenarios where data is generated at the edge of the network, these compact and robust servers are ideal. Because they sit close to the data sources, they keep latency low and can perform basic preprocessing before sending data to the main cluster.

- Supermicro WIO (Wide I/O) Servers (e.g., SYS-1115SV-WTNRT or SYS-2015SV-WTNRT): These servers support a wide variety of I/O options, making them excellent for connecting multiple data sources and handling high input volumes.

- Key Specifications per Ingestion Server:

- CPU: Latest-generation Intel Xeon Scalable processors (5th/4th Gen) or AMD EPYC (8004 series or newer), which offer good packet-processing performance for lightweight tasks.

- RAM: Minimum 64GB DDR5, expandable to 128GB or more if complex transformations are performed at this level.

- Storage:

- Two M.2 NVMe drives in RAID 1 for the operating system and logs.

- A few U.2 NVMe drives or 2.5" SATA SSDs for temporary data buffering (hot-swap for ease of maintenance).

- Network (Crucial): Multiple 10GbE Ethernet ports (RJ45/SFP+) or even 25GbE to ensure adequate data throughput to the processing layer. High-quality network cards (NICs) are essential. Consider NICs with CPU offload capabilities.
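A back-of-the-envelope calculation helps size both the ingestion NICs and the local buffer drives. This sketch assumes a target link utilization of 70% (a hypothetical headroom figure) and that the buffer must absorb the node's full ingest rate while the processing layer is unreachable; both functions are illustrative helpers, not Supermicro tools.

```python
import math


def required_ports(ingest_gbps: float, port_gbps: float, max_utilization: float = 0.7) -> int:
    """NIC ports needed so no link runs above max_utilization (70% is an assumed target)."""
    if not 0 < max_utilization <= 1:
        raise ValueError("max_utilization must be in (0, 1]")
    return math.ceil(ingest_gbps / (port_gbps * max_utilization))


def buffer_capacity_tb(ingest_gbps: float, outage_minutes: float) -> float:
    """Local buffer (decimal TB) needed to hold ingest while the processing layer is down."""
    bytes_per_second = ingest_gbps * 1e9 / 8  # gigabits/s -> bytes/s
    return bytes_per_second * outage_minutes * 60 / 1e12
```

For example, a 30 Gb/s ingest stream needs two 25GbE ports or five 10GbE ports at 70% utilization, and riding out a 30-minute outage of the processing layer requires about 6.75 TB of buffer space.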

2. Processing Layer (Middle-Tier Servers)

This is the core layer, where the streaming platforms and processing logic run (e.g., Apache Kafka, Apache Flink, or Apache Spark Streaming). It requires high computing power, low latency, and high network capacity.
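A typical operation in this layer is a windowed aggregation, such as summing readings per key every few seconds. The sketch below is a simplified, in-memory tumbling window written for illustration; engines like Flink or Spark add watermarks, late-event handling, and state eviction, all of which are omitted here. The function name and event tuple layout are hypothetical.

```python
from collections import defaultdict
from typing import Iterable


def tumbling_window_sums(
    events: Iterable[tuple[float, str, float]], window_s: float
) -> dict[tuple[int, str], float]:
    """Sum values per (window index, key) over fixed, non-overlapping windows.

    Each event is (timestamp_seconds, key, value); window k covers
    [k * window_s, (k + 1) * window_s).
    """
    if window_s <= 0:
        raise ValueError("window_s must be positive")
    # State grows with (number of keys) x (number of open windows); this is
    # the memory a real engine keeps in RAM or in its state backend.
    state: dict[tuple[int, str], float] = defaultdict(float)
    for ts, key, value in events:
        window = int(ts // window_s)
        state[(window, key)] += value
    return dict(state)
```

Because the state dictionary holds one entry per key per open window, memory use scales with key cardinality and window length, which is why the processing nodes below are specified with large amounts of RAM.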

- Suggested Servers:

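- Supermicro TwinPro® or BigTwin® (e.g., SYS-2029TP-HC1R or SYS-2029BT-HNC0R): These multi-node servers in a single chassis are excellent for streaming solutions. They deliver high compute density and share resources such as power and cooling, optimizing rack space and energy efficiency. Each node can host, for example, one Kafka broker or one Flink TaskManager. Note that these two example models are X11-generation (DDR4) platforms; to match the 4th/5th Gen Xeon and DDR5 specifications below, choose their current-generation equivalents.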

- Supermicro Ultra Series (e.g., SYS-621U-TNR4T or SYS-221U-TNR4T): These are high-performance, highly flexible, and configurable general-purpose servers suited to demanding data processing workloads.

- Supermicro Hyper Series (e.g., SYS-121C-TN10R or SYS-221U-TR4T): They offer extensive configuration options and high performance, making them an excellent option for processing clusters.

- Key Specifications per Processing Server (or per node in TwinPro/BigTwin):

- CPU: Dual Intel Xeon Scalable (5th/4th Gen) or AMD EPYC (9004 Series or higher) processors with high core counts and high frequencies to handle intensive processing operations.

- RAM: Minimum 256GB DDR5 per node/server, expandable to 512GB or more. Data streaming is often memory-intensive, especially for windowing or join operations.

- Storage:

- Two M.2 NVMe drives for the operating system and streaming application logs.

- Multiple NVMe U.2 drives (4 to 8 per node/server) for Kafka log storage, Flink state, or Spark caches. NVMe's low latency and high IOPS are crucial here.

- Network (Critical): Multiple 25GbE or 100GbE Ethernet ports (paired with compatible Supermicro switches) for communication between nodes within the cluster and with the ingestion and storage layers. High bandwidth and low latency between cluster components are essential here.
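To decide how many NVMe U.2 drives each node needs for Kafka logs, a common rough estimate multiplies ingest rate, retention time, and replication factor, then divides across brokers. This sketch assumes data is spread evenly across brokers, adds a hypothetical 30% free-space headroom, and ignores compression and index overhead; the function name is illustrative.

```python
def kafka_storage_per_broker_tb(
    ingest_mb_s: float,
    retention_hours: float,
    replication_factor: int,
    brokers: int,
    headroom: float = 0.3,
) -> float:
    """Approximate decimal TB of log storage each broker needs."""
    if replication_factor > brokers:
        raise ValueError("replication factor cannot exceed broker count")
    if headroom < 0:
        raise ValueError("headroom must be non-negative")
    # Every byte ingested is stored replication_factor times across the cluster.
    total_bytes = ingest_mb_s * 1e6 * retention_hours * 3600 * replication_factor
    per_broker = total_bytes / brokers
    return per_broker * (1 + headroom) / 1e12
```

With a hypothetical 500 MB/s of ingest, 24-hour retention, replication factor 3, and six brokers, each broker needs roughly 28 TB, which four 7.68 TB U.2 drives per node would cover.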

3. Storage Layer (Back-End Storage Servers)

Once the data is processed, it can be persisted in a data lake or database for further analysis, machine learning, or compliance.

- Suggested Servers:

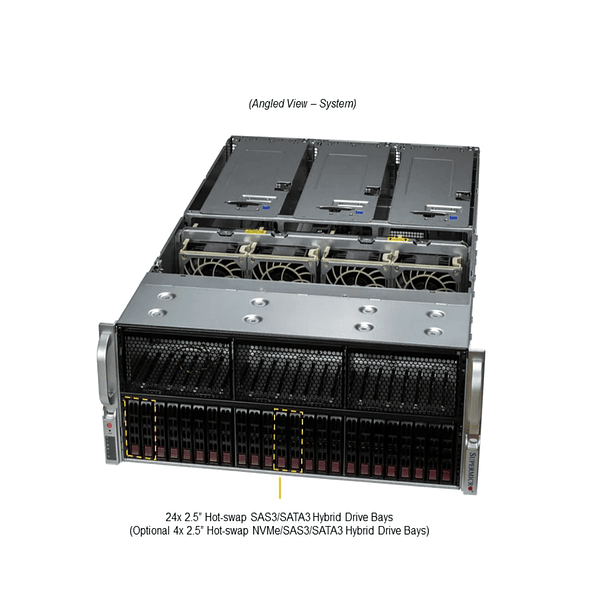

- Supermicro Petascale All-Flash NVMe Storage Servers (e.g., systems with EDSFF/E3.S drives): For storing processed data that still requires fast access, such as near-real-time analytics or serving as a source for dashboards. All-flash NVMe servers provide the lowest latency and highest IOPS.

- Supermicro high-density or Simply Double storage servers (e.g., SSG-6049P-E1CR45L or SSG-6049P-E1CR45H): For Data Lake applications, where large volumes of data are stored more cost-effectively. These servers offer a high density of HDD/SSD drives, balancing capacity and performance, and can be configured with a mix of SSDs (for caching) and HDDs (for mass storage).

- Key Specifications per Storage Server:

- CPU: Intel Xeon Scalable or AMD EPYC processors (can be a configuration with fewer cores, focusing on energy efficiency and storage I/O capacity).

- RAM: 128GB to 256GB, enough to handle metadata and data caching.

- Storage:

- NVMe: For fast databases or hot data tiering.

- SATA/SAS SSD: For data with intermediate performance requirements.

- SAS/SATA HDD: For long-term, large-scale data storage (Data Lake). RAID configuration (hardware or software) is recommended for redundancy.

- Optional: A dual-controller NAS, if high reliability and availability for file storage is a top priority and it integrates well with the data streaming ecosystem (e.g., for an NFS-based Data Lake). However, a dedicated Supermicro storage server offers more flexibility and power for big data workloads.

- Network: Multiple 25GbE or 100GbE Ethernet ports for fast and efficient data transfer to and from processing servers.
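The NVMe / SSD / HDD split above is often implemented as an age-based tiering policy that moves data to cheaper media as it cools. This sketch assumes hypothetical thresholds of 7 days for NVMe and 90 days for SATA/SAS SSD; real policies usually also weigh access frequency, and the tier labels are illustrative.

```python
from datetime import timedelta

# Hypothetical thresholds; tune them to your query patterns and capacity.
HOT_MAX_AGE = timedelta(days=7)    # NVMe: recent data for dashboards and fast queries
WARM_MAX_AGE = timedelta(days=90)  # SATA/SAS SSD: intermediate performance


def choose_tier(age: timedelta) -> str:
    """Map a dataset's age to a storage tier: 'nvme', 'ssd', or 'hdd'."""
    if age < timedelta(0):
        raise ValueError("age must be non-negative")
    if age <= HOT_MAX_AGE:
        return "nvme"
    if age <= WARM_MAX_AGE:
        return "ssd"
    return "hdd"  # long-term Data Lake storage
```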

4. Network Infrastructure (Very Critical)

The network is frequently the bottleneck in data streaming solutions.

- Supermicro (or compatible) switches:

- Top-of-Rack (ToR) Switches: Low-latency 25GbE or 100GbE switches (e.g., Mellanox/NVIDIA or Broadcom-based) with a high port count to interconnect all servers.

- Backbone connectivity: 100GbE or 400GbE core switches for traffic between racks and to primary storage.

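- Network Interface Cards (NICs): All server network cards should be 25GbE or 100GbE, preferably with RDMA (RoCE) support for low-latency communication between nodes. RDMA mainly benefits storage traffic (e.g., NVMe over Fabrics) and software that supports it, such as RDMA-capable Spark shuffle implementations; Kafka itself communicates over TCP.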
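Whether the ToR layer becomes the bottleneck depends largely on its oversubscription ratio: total server-facing bandwidth divided by total uplink bandwidth toward the backbone. This small helper computes the ratio exactly with `fractions.Fraction`; the function names and the port counts in the example are hypothetical.

```python
from fractions import Fraction


def oversubscription(down_ports: int, down_gbps: int, up_ports: int, up_gbps: int) -> Fraction:
    """Server-facing bandwidth divided by uplink bandwidth (1 means non-blocking)."""
    if up_ports <= 0 or up_gbps <= 0:
        raise ValueError("switch needs at least one uplink")
    return Fraction(down_ports * down_gbps, up_ports * up_gbps)


def describe(ratio: Fraction) -> str:
    """Format a ratio in the conventional 'N:1' notation."""
    return f"{float(ratio):g}:1"
```

A switch with 48×25GbE server ports and 8×100GbE uplinks gives 1200/800, i.e., 1.5:1 oversubscription; adding uplinks or using fewer server ports moves the ratio toward the non-blocking 1:1.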

5. Software and Orchestration

- Streaming Platforms: Apache Kafka (as a distributed message broker), Apache Flink or Apache Spark Streaming (for real-time processing).

- Databases/Data Lake: Apache Cassandra, Apache HBase, Elasticsearch for indexed data, or HDFS/S3-compatible storage for the Data Lake.

- Orchestration and Monitoring: Kubernetes (for container orchestration), Prometheus/Grafana (for monitoring), and CI/CD tools for deployment.
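A key signal to watch in Prometheus/Grafana is consumer lag: how far a consumer group's committed offsets trail the end of each Kafka partition. Kafka exposes both offsets through its client APIs, and Prometheus exporters commonly publish them; this sketch takes them as plain dictionaries so it runs without a broker. The function names are hypothetical, and counting a partition with no committed offset from 0 is an assumption.

```python
from typing import Mapping


def consumer_lag(end_offsets: Mapping[int, int], committed: Mapping[int, int]) -> dict[int, int]:
    """Per-partition lag: log-end offset minus the group's committed offset.

    Assumption: a partition with no committed offset is counted from offset 0.
    """
    return {p: max(end - committed.get(p, 0), 0) for p, end in end_offsets.items()}


def total_lag(end_offsets: Mapping[int, int], committed: Mapping[int, int]) -> int:
    """Sum of per-partition lag, a convenient number to graph and alert on."""
    return sum(consumer_lag(end_offsets, committed).values())
```

Steadily growing total lag indicates that the processing layer cannot keep up with ingestion and needs more nodes or partitions.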

Additional Considerations:

- High Availability and Resilience: All components must be redundant (power supplies, network cards, disk RAID, cluster nodes). The distributed architecture of streaming applications (Kafka, Flink, Spark) already offers inherent high availability, but the hardware must complement it.

- Scalability: The solution must be horizontally scalable. Supermicro's multi-node Twin chassis and modular servers make it easy to add nodes or servers as the load grows.

- Management: Use SuperDoctor 5 or other Supermicro management tools to monitor hardware status, temperature, and performance.

- Cooling: For a high-density setup, consider Supermicro's cooling options, including liquid cooling systems if the density is extreme.

- Local Distributor in Chile: In Chile, Super Latam is the most important authorized Supermicro distributor. They can advise you and supply specific hardware with local support.
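For the high-availability point, one way the hardware can complement software-level replication is to treat each multi-node Twin/BigTwin chassis as a failure domain, since its nodes share power and cooling, and never place two replicas of a partition in the same chassis. Kafka's `broker.rack` setting enables rack-aware replica assignment and can serve this purpose if the chassis ID is used as the rack label. The sketch below is a simplified round-robin illustration of the idea, not Kafka's actual assignment algorithm; the function, chassis, and node names are hypothetical.

```python
def place_replicas(
    partitions: int, nodes_by_chassis: dict[str, list[str]], rf: int
) -> dict[int, list[str]]:
    """Assign rf replicas per partition so that no two replicas share a chassis."""
    chassis = sorted(nodes_by_chassis)
    if rf > len(chassis):
        raise ValueError("need at least rf chassis to separate replicas")
    assignment: dict[int, list[str]] = {}
    for p in range(partitions):
        replicas = []
        for r in range(rf):
            # (p + r) % n yields rf distinct chassis for each partition.
            c = chassis[(p + r) % len(chassis)]
            nodes = nodes_by_chassis[c]
            replicas.append(nodes[p % len(nodes)])  # rotate nodes within the chassis
        assignment[p] = replicas
    return assignment
```

With three 4-node chassis and replication factor 3, losing an entire chassis (for example, to a shared power fault) still leaves two replicas of every partition online.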

By working with Super Latam, you can further fine-tune this configuration with specific Supermicro models that fit your budget and exact data streaming workload requirements.